OpenAI is rolling out new voices with Advanced Voice Mode to ChatGPT Plus and Team users on the app, in addition to the voices already available. Advanced Voice Mode (AVM) will also get a new look to give it a more homely and welcoming feel. The upgrade still leaves some promises made at GPT-4o's launch in May unfulfilled.

With Advanced Voice Mode, users can have real-time conversations with ChatGPT. It is an app-only feature and one of the reasons the ChatGPT app has been climbing the charts in the months since GPT-4o launched. Now OpenAI is making those conversations feel more natural.

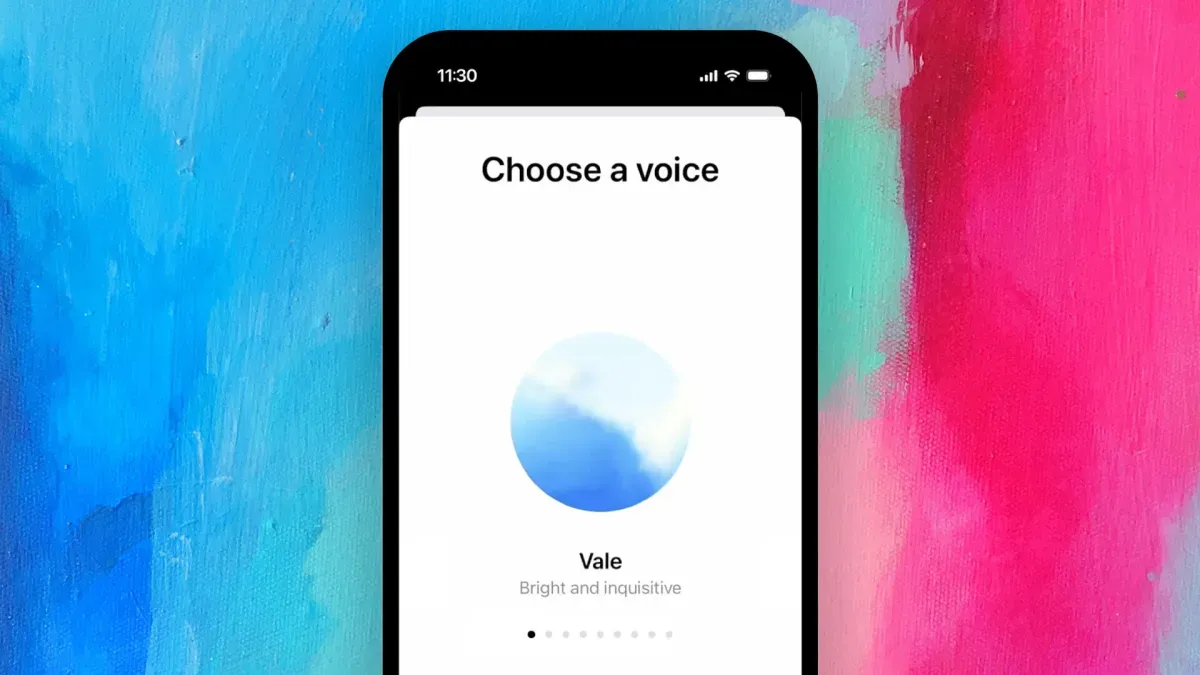

OpenAI is replacing the black dot animation users see when interacting with ChatGPT in Advanced Voice Mode with a blue animated sphere. Considering that AI doesn't exactly have a face or standardised symbol yet, OpenAI is sticking with its mysterious-looking round shape. The animation runs while a conversation is ongoing between the user and ChatGPT, and it looks more immersive and homely than the black dots.

The new voices not only give people more variety to pick from but also come with improved conversational and comprehension abilities to make conversations more natural. The added voices - Arbor, Maple, Sol, Spruce, and Vale - bring the total on ChatGPT to nine, alongside the existing Breeze, Juniper, Cove, and Ember. One voice that still isn't here is Sky.

OpenAI had to remove the Sky voice following the controversy over it sounding very much like the actress Scarlett Johansson. Johansson claimed OpenAI stole her voice, which the company denies, despite many obvious similarities between Sky and Samantha, the virtual assistant voiced by Johansson in the film Her.

https://x.com/OpenAI/status/1838642444365369814

When OpenAI demoed GPT-4o in May, it showed off the model's multimodal capabilities, such as solving a math problem written on a piece of paper. The model could analyse and process the video feed alongside the user's vocal requests simultaneously. That hasn't been rolled out yet, even with these other features coming to Advanced Voice Mode on the app, and OpenAI hasn't given any word on when it will push out the multimodal features.

Besides understanding accents better, OpenAI is bringing some of ChatGPT's customisation to AVM, like Custom Instructions and Memory. Custom Instructions allows users to personalise how ChatGPT responds to them, which is most useful when a more tailored experience is preferred. Memory helps ChatGPT recall things from previous conversations for reference. Having such features in Advanced Voice Mode takes things up a notch.

The new Advanced Voice Mode features will first roll out to ChatGPT Plus and Team users, then be pushed to Enterprise and Edu customers the following week.

Thumbnail by OpenAI, edited by TechCrunch