Above is an image I've titled "Head in the Clouds". @castleberry encouraged me to detail my process, so this post explains how the piece came into being over the last few months.

A while ago I discovered this image on Twitter. This was above and beyond the pieces I had been able to achieve with VQGAN and CLIP + Diffusion.

With VQGAN I was able to achieve heavenly patterns like the images below:

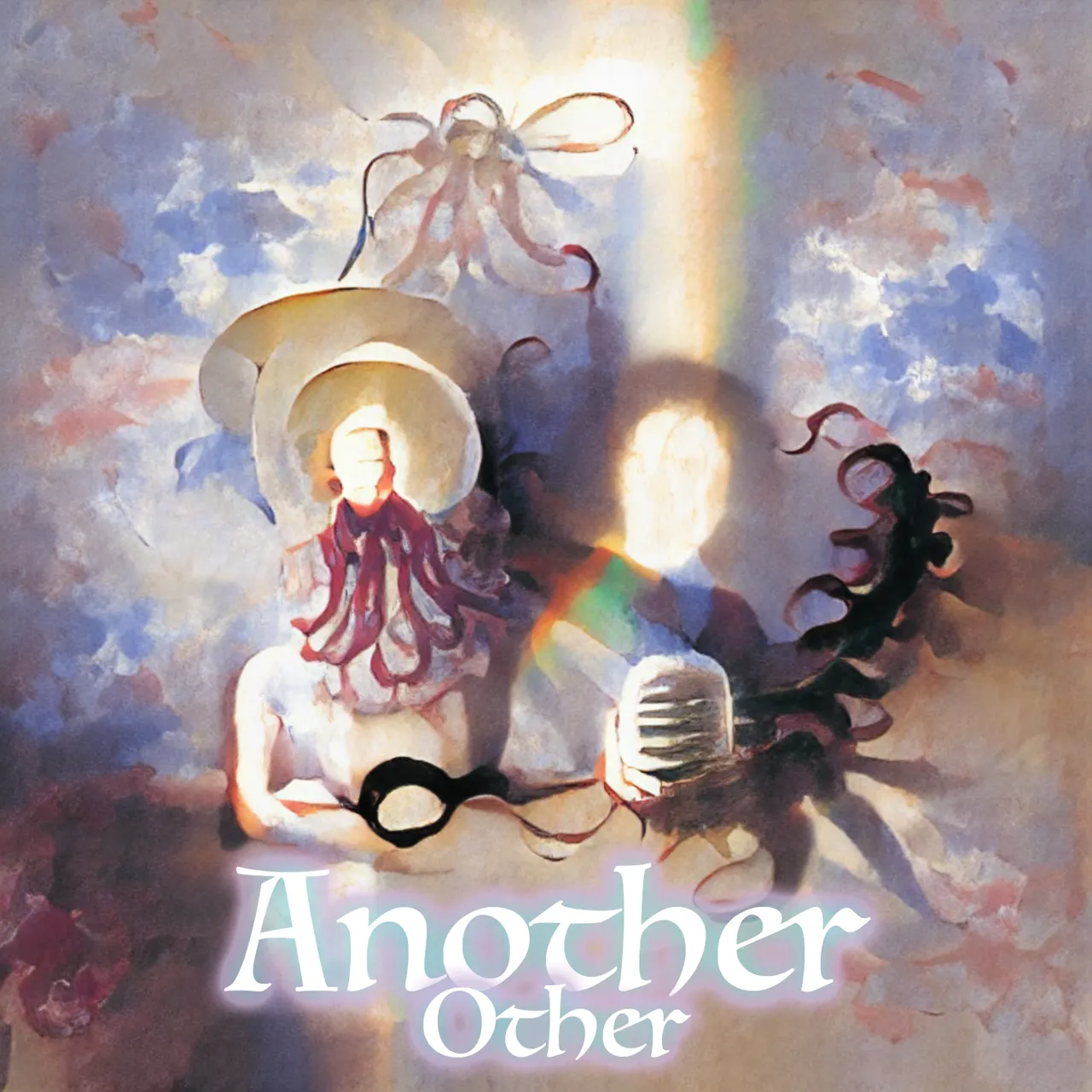

With a bit of effort I was also able to render vague characters, like I did for the cover art of my single Another Other:

If you haven't seen it, the music video for Another Other was also created with AI.

But I really felt held back by VQGAN. I was able to get access to CLIP + Diffusion and with that I rendered some fantastic landscapes and vaguely heavenly figures.

But it kept confusing oraphim with seraphim! Terrible, absolutely unusable technology. Also I think the "midwest" in this prompt caused it to draw the angel somewhat like a turkey. Who is to say.

Back to the original piece:

My first thought was that there was no way this wasn't prompted by "head in the clouds" but according to the creator this art was created using the phrase "cloud from FF7 4K".

Either way, there was a level of consistency here that I had yet to see with CLIP + Diffusion. After a lot of work and refinement I figured out how to render characters instead of throwing abstract patterns on a canvas. I figured out some level of consistency, but that beautiful head in the clouds was still out of my reach.

I know this looks great but for every one composed image there are nine that are just random features thrown on a canvas.

So I reached out to the creator of the piece and they told me they were the main funder of the open source AI research collective Eleuther.

He told me he was doing multiple passes, meaning the output of the model needed cleaning up and facetuning in Photoshop and other services. But he also gave me an invite to the midjourney beta. I am not sure how Eleuther and midjourney are connected.

So I got on midjourney, and making characters was a lot easier, but landscapes suddenly became more difficult. To see how I work with midjourney to create characters, read this post.

Fast forward a few months and my friend @kaliyuga posts the following:

I really love her work and it felt like a synchronous moment, so I reached out and asked her if there was some secret term that was shaping it to have this style. She told me and I tried it out, but it brought in some color features that didn't vibe with my style.

But her piece did remind me of The Smashing Pumpkins. And I love The Smashing Pumpkins. In particular it reminded me of the song Tonight, Tonight.

And that became my bridge to what I was looking for. As soon as I referenced that song it started bringing in this wonderful palette of colors and the moon from the video. Tonight, Tonight was of course inspired by Le Voyage dans la Lune (1902), which shows that human inspiration is not that different from the way models reference existing patterns. How many times has the 1-4-5 chord progression been used in rock music?

At any rate, the first outputs looked like this:

Okay sure, they're kind of ugly. But the colors are nice, and that particular cloud shape that appeared in the two pieces that inspired me was there. I knew I was close in "latent space". I just had to take a step in the right direction.

A few dozen hops and skips and I landed here:

And then from there I landed here:

As you can see the bottom right corner matches the cover of this blog. But it still needs upscaling.

From this point there are two steps of upscaling. And during each step of upscaling there will be semantic mutation. A good example of semantic mutation is this:

The top is the original render. The second is the upscale. In the upscale, when cuts are taken of the image and the jellyfish are sampled, there is some interpretation that these jellyfish look like clouds of sand. And therefore they are painted more like clouds of sand. A lot of features are being actively reinterpreted in the upscale. This is not really what we want unless we are trying to introduce entirely new features, like painting a mouth in the previous post. There is a nice new bubble imagined, but I was not looking for that.

With Disco Diffusion you can limit the amount of mutation that occurs per step. You can ensure that the upscaled image is continuously primed by the lower scale image in whatever balance you want, so you can control mutation. But with midjourney you can't, which is frustrating.
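To make the idea of "priming" the upscale with the lower scale image a little more concrete, here is a toy sketch. It is not how Disco Diffusion is actually implemented; the function `constrained_step`, the `mutation` weight, and the random "images" are all hypothetical stand-ins I made up for illustration. It only shows the general principle that a single weight can decide how far each step is allowed to drift from the source.

```python
import random


def constrained_step(source, proposal, mutation):
    """Pull a model's proposed pixels back toward the source image.

    Hypothetical helper for illustration only. mutation=0.0 keeps the
    source untouched; mutation=1.0 accepts the proposal completely.
    Both images are flat lists of floats in [0, 1].
    """
    if not 0.0 <= mutation <= 1.0:
        raise ValueError("mutation must be between 0 and 1")
    return [(1.0 - mutation) * s + mutation * p for s, p in zip(source, proposal)]


def mean_abs_diff(a, b):
    """Average per-pixel distance between two images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


rng = random.Random(0)
source = [rng.random() for _ in range(64)]    # stand-in for the upscaled low-res render
proposal = [rng.random() for _ in range(64)]  # stand-in for the model's reinterpretation

low = constrained_step(source, proposal, 0.2)   # tightly primed by the source
high = constrained_step(source, proposal, 0.8)  # mostly free to mutate

drift_low = mean_abs_diff(source, low)
drift_high = mean_abs_diff(source, high)
print(f"drift at 0.2: {drift_low:.3f}, drift at 0.8: {drift_high:.3f}")
```

The lower the weight, the more of the small scale composition survives, at the cost of fewer new details.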

So you can only take multiple branching pathways of upscaling and hope that across the two passes you can preserve the features your mind perceives in the small scale version while adding new details when necessary.

This is a long, laborious process. I might for example be tempted to settle on this:

But it just didn't feel right so I kept going until I found this:

Keep in mind there were about 20 or 25 renders between these two final steps. And sometimes the model just fights you and won't output anything coherent. Sometimes I just give up because I'm not happy even though it's 95% of the way there. It's easy to just show these two steps and think how easy it would be to change with a digital brush stroke.

But with AI you're sketching with patterns. Painting with layers of hallucination. Through that you can do almost anything with time and patience and being able to learn and listen to the model.

And that is my long journey from the inception of the idea to its realization, over about three months.

It's interesting because if you trace back to the original piece, the creator claims they had no intention to create a female head in the clouds. But I saw that and the pattern just... stuck in my mind. It took root and started to grow. When I saw Aeolus by @kaliyuga I knew I had to manifest what I was feeling before.

Going into these things, you have these expectations about the associations between words and visual patterns. Quite often you learn that the collective describes the visual patterns you perceive in a very different way. In the process of learning what language the model speaks you're learning the patterns of the collective whole.

There's something magical about that.

This piece could ultimately not exist without my love for a '90s alternative rock band and the fact that its founder was obsessed with Le Voyage dans la Lune. Does this piece look like cloud from FF7? Does it look like @kaliyuga's Aeolus? Does it look like Tonight, Tonight?

Kind of, sure. But it's also its own thing. And I tried to make it 30 or 40 times and failed. So there was definitely an intense intent behind it.

I studied psychology in college. I wanted to understand how the brain works. And so when deep learning started to do things that only the brain did I spent a decade studying machine learning. I see the pattern deconstruction and reconstruction laid bare because that was my job for years. And because I'm also an artist that semantic construction feels very similar to my natural process of creativity.

I will tell you this. When I have a creative block I simply cannot make anything good with AI. There is something in this image that is a reflection of self and came from within me. And yet it was made externally with math.

I think that's strange for some. To imagine that math can create beauty. But they forget it's math in iterative relation to a being that experiences the rich, vast diversity of reality. It comes from a person with an entire lifetime of experiences that informs each piece they create.