It is frustrating how little control we have over the tools and data in our lives ...

BUT

As developers, we can often get around the lack of convenience with a bit of time, knowledge, and effort.

In my case today I decided I was going to clean up my Watch Later playlist on YouTube.

My ADHD means that, just like browser bookmarks I will never get to read, my WL is full of stuff I will never watch with titles like "What's new in 2021".

There is no "nuke and start over" option, but apparently you can request a download using Google Takeout.

So I looked into the API and it SEEMED to do everything I needed.

Except they don't give you access to Watch Later via the API!

A privacy thing maybe?

Weird.

Enter Selenium!

But wait, Google blocks Selenium from logging in.

Damn.

Even with 2FA turned off?

Well, the solution, as you might have already guessed, is to not automate the logging-in part. This has the nice benefit that you can use any passkey or authentication protection you like, and log into any Google account!

We simply tell our script to wait for active confirmation that it is safe to continue.

Another wrinkle is the infinite scroll. We might have been able to get around that using JS or CSS snooping, but scrolling until the page height stops changing, plus a timeout, seems to work.
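To make the "no changes plus a timeout" idea easier to see on its own, here is a minimal sketch of that loop with the browser abstracted away. The function name `scroll_until_stable` and its parameters (`get_height`, `scroll_to_bottom`, `idle_timeout`, `poll_interval`, `sleep`, `clock`) are my own illustrative choices, not part of Selenium or of the script below.

```python
import time
from typing import Callable


def scroll_until_stable(
    get_height: Callable[[], int],
    scroll_to_bottom: Callable[[], None],
    idle_timeout: float = 60.0,
    poll_interval: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> int:
    """Keep scrolling until the height has not changed for idle_timeout seconds.

    Returns the last height reported by get_height.
    """
    last_height = get_height()
    last_change = clock()
    while True:
        scroll_to_bottom()
        sleep(poll_interval)  # give the page time to load more items
        new_height = get_height()
        if new_height != last_height:
            # The page grew, so restart the idle timer.
            last_height = new_height
            last_change = clock()
        elif clock() - last_change > idle_timeout:
            # Nothing new for a while: assume we reached the end.
            return last_height
```

With Selenium you would pass something like `lambda: driver.execute_script("return document.documentElement.scrollHeight")` as `get_height`. Keeping `sleep` and `clock` injectable is just a convenience so the loop can be tried out without a real browser or real waiting.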

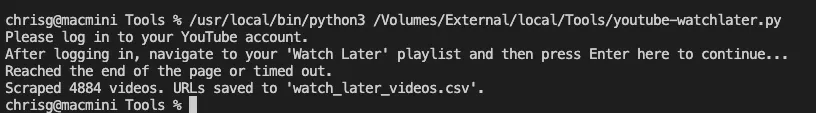

So here, for your delight, is a Python script that uses Selenium to download your Watch Later list to a CSV. I will follow up with code to then use that CSV list ...

```python
import csv
import time

import undetected_chromedriver as uc
from selenium.webdriver.common.by import By


# Function to wait for user to log into YouTube
def wait_for_manual_login(driver):
    driver.get('https://www.youtube.com/')
    print("Please log in to your YouTube account.")
    # Block until the user confirms; the login itself is never automated
    input("After logging in, navigate to your 'Watch Later' playlist and then press Enter here to continue...")


# Function to scrape Watch Later videos
def scrape_watch_later(driver):
    driver.get('https://www.youtube.com/playlist?list=WL')
    time.sleep(2)

    # Scroll to the bottom of the page to load all videos
    last_height = driver.execute_script("return document.documentElement.scrollHeight")
    start_time = time.time()
    timeout = 60  # seconds without any height change before giving up

    while True:
        driver.execute_script("window.scrollTo(0, document.documentElement.scrollHeight);")
        time.sleep(2)
        new_height = driver.execute_script("return document.documentElement.scrollHeight")
        if new_height == last_height:
            # Check if the timeout has been reached
            if time.time() - start_time > timeout:
                print("Reached the end of the page or timed out.")
                break
        else:
            start_time = time.time()  # Reset the timeout counter
            last_height = new_height

    # Extract video URLs, skipping any matching elements that have no href
    video_elements = driver.find_elements(By.XPATH, '//*[@id="video-title"]')
    hrefs = (video.get_attribute('href') for video in video_elements)
    video_urls = [url for url in hrefs if url]
    return video_urls


# Function to save URLs to a CSV file
def save_to_csv(video_urls, filename='watch_later_videos.csv'):
    with open(filename, mode='w', newline='', encoding='utf-8') as file:
        writer = csv.writer(file)
        writer.writerow(['Video URL'])
        for url in video_urls:
            writer.writerow([url])


# Main function
def main():
    options = uc.ChromeOptions()
    options.add_argument("--start-maximized")  # Start the browser maximized
    driver = uc.Chrome(options=options)
    try:
        wait_for_manual_login(driver)
        video_urls = scrape_watch_later(driver)
        save_to_csv(video_urls)
        print(f"Scraped {len(video_urls)} videos. URLs saved to 'watch_later_videos.csv'.")
    finally:
        driver.quit()


if __name__ == "__main__":
    main()
```
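If you want to poke at the CSV in the meantime, here is a rough sketch of reading it back and reducing each URL to its video ID. It assumes the links carry the ID in a `v=` query parameter (playlist links often have extra parameters such as `list=WL` tacked on), and the helper names `video_id_from_url` and `load_video_ids` are made up for this example.

```python
import csv
from urllib.parse import parse_qs, urlparse


def video_id_from_url(url):
    """Return the value of the v= query parameter, or None if it is missing."""
    values = parse_qs(urlparse(url).query).get("v")
    return values[0] if values else None


def load_video_ids(filename="watch_later_videos.csv"):
    """Read the 'Video URL' column and return unique video IDs in file order."""
    seen = set()
    video_ids = []
    with open(filename, newline="", encoding="utf-8") as file:
        for row in csv.DictReader(file):
            video_id = video_id_from_url(row.get("Video URL") or "")
            # Skip rows without an ID and IDs we have already collected
            if video_id and video_id not in seen:
                seen.add(video_id)
                video_ids.append(video_id)
    return video_ids
```

Deduplicating on the ID rather than the full URL means the same video only shows up once, even if its links differ in their extra parameters.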