Artificial intelligence (AI) has made significant strides in fields ranging from healthcare to finance to transportation. Because it can analyze large amounts of data quickly, it is not surprising that AI has been proposed as a tool for psychotherapy. But can AI really replace human therapists in providing mental health treatment?

AI could be used in psychotherapy in a few different ways. One approach is chatbots or virtual assistants that hold therapy-style conversations by text or voice. These tools use natural language processing and machine learning to respond to users' questions and concerns in a way that mimics human conversation. They can offer support and guidance on a range of mental health issues, including anxiety, depression, and stress management.

AI could also be combined with virtual reality (VR) technology. VR lets users immerse themselves in a computer-generated environment and interact with it in a way that feels real. This can be especially helpful for people who have trouble visualizing or imagining situations, because it lets them experience therapeutic scenarios more concretely. AI-powered VR sessions could be tailored to each person's specific needs and goals, helping them work through their challenges and improve their mental health.

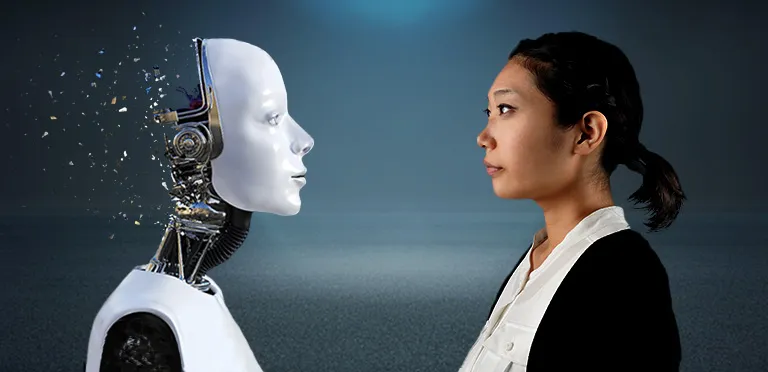

Although AI has the potential to be a valuable tool for psychotherapy, it also has limitations. One major concern is the lack of human connection and empathy. Psychotherapy often depends on the relationship between patient and therapist, who provides a supportive, non-judgmental space in which the patient can explore their thoughts and feelings. That relationship is difficult to replicate with an AI system, which cannot fully understand or respond to emotional nuance.

Another limitation of AI-based psychotherapy is the potential for bias. AI algorithms are only as good as the data they are trained on: if the training data is biased, the resulting system is likely to reproduce that bias. This is especially problematic in mental health, where stigma and discrimination are already serious concerns. Training AI systems on diverse, representative data is essential to avoid perpetuating harmful biases.

Finally, there is the question of whether AI could replace human therapists altogether. AI can certainly provide valuable support and guidance, but it is unlikely to replace the human element of psychotherapy. Mental health treatment is complex and nuanced, and it requires understanding and responding to each person's needs and experiences in ways that current AI systems cannot.

In conclusion, AI has the potential to be a valuable tool for psychotherapy, but its limitations must be taken seriously. It can provide support and guidance, yet it is unlikely to fully replace human therapists. Training AI systems on diverse, representative data and preserving the human connection and empathy at the heart of therapy will be essential to making AI-based psychotherapy effective and ethical.