As many of you have seen, my feed over the past few months has been full of interesting AI outputs. Why? I've been using the Hive blockchain to train different AI systems in a variety of competitive settings. One of these training systems involved coding models with GPT-3.5 Turbo, GPT-4, our own custom models, and open-source ones such as Stable Diffusion and Bark AI (TTS).

After working with a number of free and open technologies, as well as some closed ones, we finally achieved coherence in our AI system. The next goal was to take the system from the cloud (enterprise-level Google Colab A100s) all the way down to a quad-core i5 with an AMD RX 580 GPU (8 GB VRAM).

I'm proud to announce that OneLoveIPFS (in partnership with freedomdao) has accomplished this: we built a localized AI system that runs without an internet connection or any external corporation. Why do this? For many people around the world, the flow of information is highly constrained, for example when a rainstorm knocks out the power in Delhi.

Demo

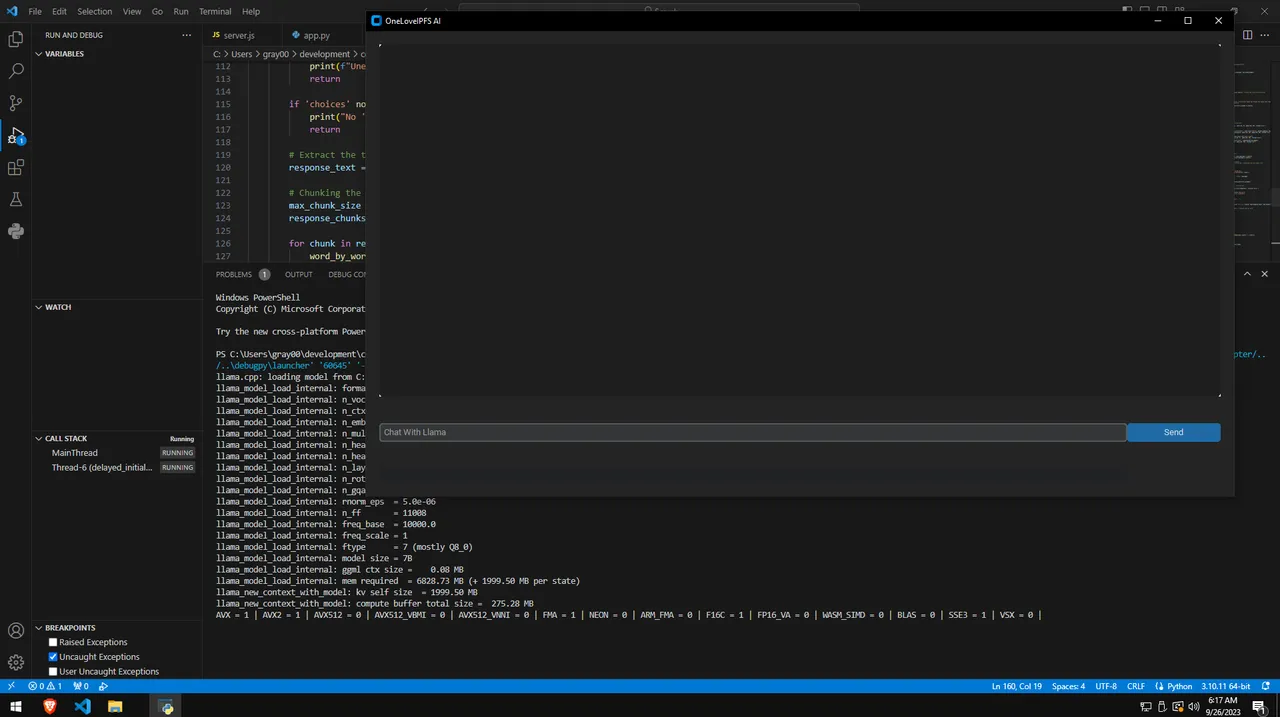

I'd like to demonstrate how to build, from scratch, a localized multi-model (SmartAI) system using Llama 2 and Stable Diffusion. For resource-constrained devices, I will first showcase our dual-model system (Stable Diffusion + Llama 2). The next post will add TTS (Bark AI via Transformers) for voice.
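Conceptually, the dual-model setup sends each message you type to both models at the same time: Llama 2 continues the story while Stable Diffusion draws a picture of it. Here is a minimal sketch of that fan-out. `text_model` and `image_model` are hypothetical stub functions standing in for the real models, and the thread pool is an illustration choice. gui.py itself simply starts two plain threads.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical stand-ins for the two models. In gui.py these roles are played by
# llama_cpp.Llama (text) and the Automatic1111 txt2img API (images).
def text_model(prompt: str) -> str:
    return f"[story continuation for: {prompt}]"


def image_model(prompt: str) -> bytes:
    return prompt.encode("utf-8")  # placeholder for PNG bytes


def dual_model_turn(prompt: str) -> tuple[str, bytes]:
    """Send one user message to both models at once and wait for both results."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        text_future = pool.submit(text_model, prompt)
        image_future = pool.submit(image_model, prompt)
        return text_future.result(), image_future.result()


text, image = dual_model_turn("I open the castle gates")
```

Because the two calls are independent, a slow image generation never holds up the text reply, and vice versa.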

Download the required software.

Python 3.10.11

https://www.python.org/downloads/release/python-31011/

Visual Studio Build Tools, with the C++ build tools checked during installation (pip compiles llama-cpp-python from source, which needs a C++ compiler).

Windows

Automatic Installation on Windows

Install Python 3.10 (3.10.11 is linked above; the webui README suggests 3.10.6, because newer Python versions did not support torch at the time), checking "Add Python to PATH".

Install Git.

Download the stable-diffusion-webui-directml repository, for example by running:

```
git clone https://github.com/lshqqytiger/stable-diffusion-webui-directml.git
```

Run webui-user.bat from Windows Explorer as normal, non-administrator, user.

https://github.com/lshqqytiger/stable-diffusion-webui-directml
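The webui and this guide both assume Python 3.10, so it is worth checking which interpreter is actually on PATH before going further. Here is a small sketch you can run with the same `python` you will later use for gui.py. `is_supported_python` is a hypothetical helper name and is not part of the webui.

```python
import sys


def is_supported_python(version_info=sys.version_info, required=(3, 10)) -> bool:
    """True if the interpreter's major.minor matches the version this guide assumes."""
    return tuple(version_info[:2]) == required


if not is_supported_python():
    print(f"Warning: running Python {sys.version.split()[0]}; this guide assumes 3.10.x")
```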

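Install the required Python packages. llama-cpp-python is pinned to 0.1.78, the last release that loads GGML .bin models like the one used below (later releases expect GGUF). Pillow is included because gui.py imports PIL:

```
pip install llama-cpp-python==0.1.78 customtkinter requests pillow
```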
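To confirm the install worked, you can check that the interpreter can find every module gui.py imports from third-party packages. Note that Pillow is imported as `PIL`. Here is a sketch using only the standard library. `missing_modules` is a hypothetical helper, and the module list is taken from gui.py's imports.

```python
import importlib.util

# Top-level import names used by gui.py (Pillow's import name is "PIL")
REQUIRED_MODULES = ["llama_cpp", "customtkinter", "requests", "PIL"]


def missing_modules(names=REQUIRED_MODULES) -> list[str]:
    """Return the module names that the current interpreter cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_modules()
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All required packages are installed.")
```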

Save the following code as gui.py and put gui.py in your Automatic1111 install directory. Start the Automatic1111 webui (webui-user.bat) first, because gui.py sends its image requests to the webui's local API.

```python
import asyncio
import base64
import io
import os
import random
import sys
import threading
import time
import tkinter as tk
from concurrent.futures import ThreadPoolExecutor
from datetime import datetime

import customtkinter
import requests
from llama_cpp import Llama
from PIL import Image, ImageTk

# Load the GGML Llama 2 chat model from the same folder as this script
script_dir = os.path.dirname(os.path.realpath(__file__))
model_path = os.path.join(script_dir, "llama-2-7b-chat.ggmlv3.q8_0.bin")
llm = Llama(model_path=model_path, n_ctx=3999)

# A single worker, so only one generation runs on the model at a time
executor = ThreadPoolExecutor(max_workers=1)


async def llama_generate_async(prompt):
    # Run the blocking llama.cpp call in the executor so it does not block the event loop
    loop = asyncio.get_running_loop()
    output = await loop.run_in_executor(executor, lambda: llm(prompt, max_tokens=3999))
    return output


def word_by_word_insert(text_box, message):
    # "Type" the reply into the text box one word at a time
    for word in message.split(' '):
        text_box.insert(tk.END, f"{word} ")
        text_box.update_idletasks()
        time.sleep(0.1)  # pause this worker thread, not the GUI
    text_box.insert(tk.END, '\n')
    text_box.see(tk.END)


class App(customtkinter.CTk):
    def __init__(self):
        super().__init__()
        self.setup_gui()
        # Delay the story initialization (daemon thread, so closing the window exits cleanly)
        threading.Thread(target=self.delayed_initialize_story, daemon=True).start()

    def delayed_initialize_story(self):
        time.sleep(120)  # Wait for 2 minutes (120 seconds)
        self.initialize_story()

    def initialize_story(self):
        story_prompt = "Once upon a time in a faraway kingdom, a mysterious event has thrown the realm into chaos. You, a brave adventurer, have just arrived at the castle gates. What will you do?"
        self.text_box.insert(tk.END, f"Story: {story_prompt}\n")
        self.text_box.see(tk.END)
        threading.Thread(target=self.generate_response, args=(story_prompt,), daemon=True).start()

    def setup_gui(self):
        # Configure window
        self.title("OneLoveIPFS AI")
        self.geometry(f"{1820}x{880}")

        # Configure grid layout (4x4)
        self.grid_columnconfigure(1, weight=1)
        self.grid_columnconfigure((2, 3), weight=0)
        self.grid_rowconfigure((0, 1, 2), weight=1)

        # Create a label to display the image with a grey background
        self.image_label = tk.Label(self, bg='#202225')
        self.image_label.grid(row=4, column=1, columnspan=2, padx=(20, 0), pady=(20, 20), sticky="nsew")

        # Create text box
        self.text_box = customtkinter.CTkTextbox(self, bg_color="white", text_color="white", border_width=0, height=20, width=50, font=customtkinter.CTkFont(size=13))
        self.text_box.grid(row=0, column=1, rowspan=3, columnspan=3, padx=(20, 20), pady=(20, 20), sticky="nsew")

        # Create main entry and button
        self.entry = customtkinter.CTkEntry(self, placeholder_text="Chat With Llama")
        self.entry.grid(row=3, column=1, columnspan=2, padx=(20, 0), pady=(20, 20), sticky="nsew")
        self.send_button = customtkinter.CTkButton(self, text="Send", command=self.on_submit)
        self.send_button.grid(row=3, column=3, padx=(0, 20), pady=(20, 20), sticky="nsew")
        # Pressing Enter in the entry also submits
        self.entry.bind('<Return>', self.on_submit)

    def on_submit(self, event=None):
        message = self.entry.get().strip()
        if message:
            self.entry.delete(0, tk.END)
            self.text_box.insert(tk.END, f"You: {message}\n")
            self.text_box.see(tk.END)
            # Send the same message to the language model and the image model in parallel
            threading.Thread(target=self.generate_response, args=(message,), daemon=True).start()
            threading.Thread(target=self.generate_images, args=(message,), daemon=True).start()

    def generate_response(self, message):
        # Capture the last 18 lines from the text box for context
        all_text = self.text_box.get('1.0', tk.END).split('\n')[:-1]  # Removing the last empty line
        last_18_lines = all_text[-18:]

        # Add timestamps and [PASTCONTEXT] label
        context_with_timestamps = []
        for line in last_18_lines:
            timestamp = datetime.now().strftime('%Y-%m-%d %H:%M:%S')
            context_with_timestamps.append(f"[{timestamp}][PASTCONTEXT] {line}")

        # Combine context with new message
        full_prompt = '\n'.join(context_with_timestamps) + f'\nYou: {message}'

        # Run the async wrapper on a fresh event loop owned by this worker thread
        full_response = asyncio.run(llama_generate_async(full_prompt))

        # Ensure full_response is a dictionary and has the expected keys
        if not isinstance(full_response, dict):
            print(f"Unexpected type for full_response: {type(full_response)}. Expected dict.")
            return
        if 'choices' not in full_response or not full_response['choices']:
            print("No 'choices' key or empty 'choices' in full_response")
            return

        # Extract the text from the first choice
        response_text = full_response['choices'][0].get('text', '')

        # Chunking the response
        max_chunk_size = 2000
        response_chunks = [response_text[i:i + max_chunk_size] for i in range(0, len(response_text), max_chunk_size)]
        for chunk in response_chunks:
            word_by_word_insert(self.text_box, f"AI: {chunk}")  # Update word by word

    def generate_images(self, message):
        url = 'http://127.0.0.1:7860/sdapi/v1/txt2img'
        payload = {
            "prompt": message,
            "steps": 9,
            "seed": random.randrange(sys.maxsize),
            "enable_hr": False,
            "denoising_strength": 0.7,
            "cfg_scale": 7,
            "width": 1000,
            "height": 427,
        }
        try:
            response = requests.post(url, json=payload)
        except requests.exceptions.RequestException as e:
            print("Could not reach the Stable Diffusion API (is the webui running with --api?): ", e)
            return

        if response.status_code == 200:
            try:
                r = response.json()
                for i in r['images']:
                    # Strip an optional "data:image/png;base64," prefix before decoding
                    image = Image.open(io.BytesIO(base64.b64decode(i.split(",", 1)[-1])))
                    img_tk = ImageTk.PhotoImage(image)
                    self.image_label.config(image=img_tk)
                    self.image_label.image = img_tk  # keep a reference so Tk does not drop the image
            except (ValueError, KeyError, OSError) as e:
                print("Error processing image data: ", e)
        else:
            print("Error generating image: ", response.status_code)


if __name__ == "__main__":
    # Run your tkinter app
    app = App()
    app.mainloop()
```
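The "memory" in `generate_response` is just prompt construction. The last 18 lines of the chat window are tagged and placed in front of the new message. Below, the same logic is pulled out into a standalone function so you can try it without loading a model. `build_prompt` is a hypothetical name, and its `now` argument exists only to make the output repeatable.

```python
from datetime import datetime


def build_prompt(history_lines, message, max_lines=18, now=None):
    """Mirror gui.py's prompt building: keep the last `max_lines` lines,
    tag each with a timestamp and [PASTCONTEXT], then append the new message."""
    stamp = (now or datetime.now()).strftime('%Y-%m-%d %H:%M:%S')
    context = [f"[{stamp}][PASTCONTEXT] {line}" for line in history_lines[-max_lines:]]
    return '\n'.join(context) + f'\nYou: {message}'


prompt = build_prompt(
    ["Story: You stand at the gates.", "AI: The guard nods."],
    "I enter",
    now=datetime(2023, 9, 1, 12, 0, 0),
)
print(prompt)
```

Because gui.py stamps every line with the current time when it builds the prompt, the timestamps show when the prompt was assembled rather than when each line was written.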

Install

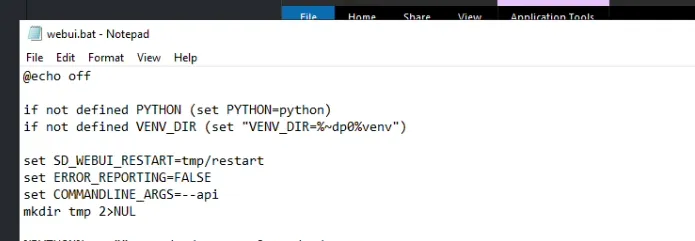

Once you have downloaded all the software, go into your Automatic1111 webui folder and run "webui-user.bat". This downloads the files needed to run the image model, including Stable Diffusion itself.

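Now that Automatic1111 is running locally, you need to enable its API. Close the webui, open webui-user.bat in a text editor, and add --api to the COMMANDLINE_ARGS line (for example, set COMMANDLINE_ARGS=--api). Then start it again. This enables the Stable Diffusion API that gui.py calls at http://127.0.0.1:7860/sdapi/v1/txt2img.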
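Once the webui is back up, you can check that the API is really enabled before starting gui.py. Here is a minimal sketch using only the standard library. It assumes the default address http://127.0.0.1:7860, which is the one gui.py posts to, and the webui's GET /sdapi/v1/sd-models endpoint, which lists installed checkpoints. `sd_api_available` is a hypothetical helper name.

```python
import json
import urllib.request


def sd_api_available(base_url="http://127.0.0.1:7860", timeout=5.0) -> bool:
    """Return True if the Automatic1111 API answers with JSON at base_url."""
    try:
        with urllib.request.urlopen(f"{base_url}/sdapi/v1/sd-models", timeout=timeout) as resp:
            json.load(resp)  # expect a JSON list of installed checkpoints
            return resp.status == 200
    except (OSError, ValueError):  # connection refused, HTTP errors, timeouts, bad JSON
        return False


print("Stable Diffusion API reachable:", sd_api_available())
```

If the webui was started without --api, the /sdapi routes should be missing, so this check should print False.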

Great. Our image model is set up.

Download the .bin model file for the language model:

https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML/blob/main/llama-2-7b-chat.ggmlv3.q8_0.bin

Save the model into the Automatic1111 directory alongside gui.py. gui.py looks for the model file in its own folder.
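gui.py builds the model path from its own folder (`script_dir`), so the file name must match exactly. Here is a small check you could run from that folder before launching. `find_model` is a hypothetical helper, and the file name is copied from gui.py.

```python
import os

MODEL_FILE = "llama-2-7b-chat.ggmlv3.q8_0.bin"  # the file name gui.py loads


def find_model(directory, filename=MODEL_FILE):
    """Return the model's full path if the file exists in `directory`, else None."""
    path = os.path.join(directory, filename)
    return path if os.path.isfile(path) else None


print(find_model(os.getcwd()) or f"{MODEL_FILE} not found in {os.getcwd()}")
```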

Run gui.py

```
python gui.py
```

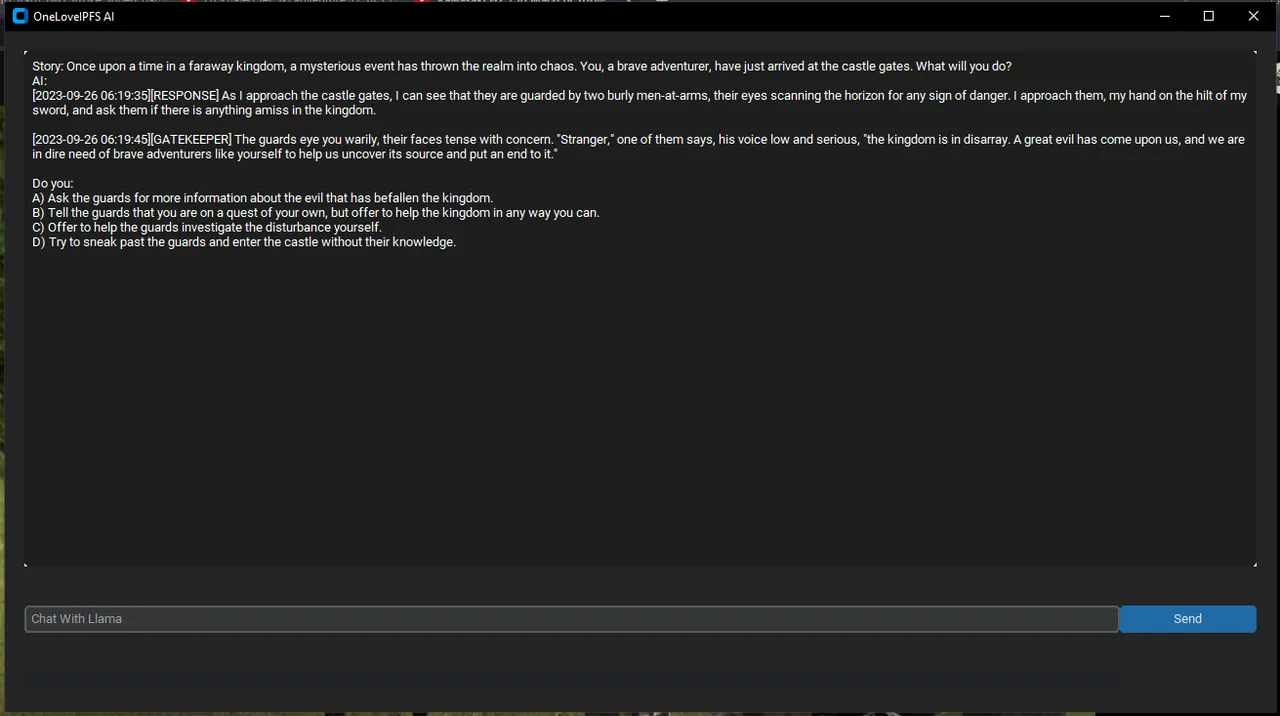

You should see this.

After the two-minute timer completes, story generation starts locally using TheBloke's Llama 2 model. Each message you send after that also goes to Stable Diffusion, which generates an image for it.
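The two-minute delay in `delayed_initialize_story` is a fixed sleep, presumably to give the model and the webui time to load (the post does not say why). If you would rather have the story start as soon as things are ready, one alternative is to poll a readiness check. This is an assumption on my part and not how gui.py currently works. `wait_until` is a hypothetical helper.

```python
import time


def wait_until(check, timeout=300.0, interval=5.0):
    """Call check() every `interval` seconds until it returns True or `timeout` expires.
    Returns True if check() succeeded, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        if check():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

In gui.py, `delayed_initialize_story` could call `wait_until(...)` with a check of your choosing, such as a ping to the webui API, in place of `time.sleep(120)`.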

You can change the AI's prompt to anything you like. Right now it's set up as an adventure game.

To change the prompt, modify this line in the initialize_story method of gui.py:

```python
story_prompt = "Once upon a time in a faraway kingdom, a mysterious event has thrown the realm into chaos. You, a brave adventurer, have just arrived at the castle gates. What will you do?"
```
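If you switch prompts often, editing the source each time gets tedious. One option is to read the prompt from an environment variable and fall back to the adventure opening. This is hypothetical: gui.py has no such setting, and the variable name STORY_PROMPT and the helper `get_story_prompt` are illustrations.

```python
import os

DEFAULT_STORY_PROMPT = (
    "Once upon a time in a faraway kingdom, a mysterious event has thrown the realm into chaos. "
    "You, a brave adventurer, have just arrived at the castle gates. What will you do?"
)


def get_story_prompt(env=os.environ):
    """Use STORY_PROMPT from the environment if it is set and non-empty, else the default."""
    prompt = env.get("STORY_PROMPT", "").strip()
    return prompt or DEFAULT_STORY_PROMPT
```

With this, initialize_story would use `story_prompt = get_story_prompt()`. On Windows you could then run `set STORY_PROMPT=You wake up aboard a derelict starship.` before `python gui.py`.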

Thank you for reading

Repos:

https://github.com/graylan0/mode-zion

https://github.com/graylan0/llama2-games-template/blob/main/llama2-adventure-game.py

https://github.com/lshqqytiger/stable-diffusion-webui-directml

https://github.com/TomSchimansky/CustomTkinter

https://github.com/abetlen/llama-cpp-python

Game Demo: