The last time I wrote about self governance was in "Quantified Self, The Reputation Economy, and AI enabled Self Governance". This time I will go into more detail on how self governance works and how I envision some possible technical aspects of this reputation economy.

The concept of stakeholders in the context of self governance

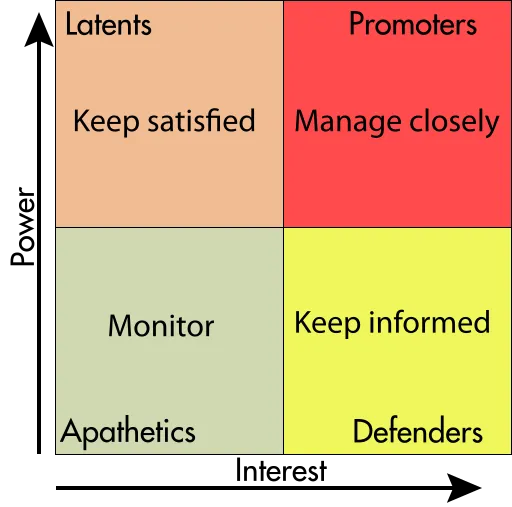

In self governance, just as businesses and corporations have "shareholders", an individual has "stakeholders". Stakeholders are anyone who cares what happens to that individual: anyone who has taken a position on the individual's success, anyone in the individual's corner. They could be friends, colleagues, family members, or anyone who depends on the individual now or may depend on them in the future. This can be quite a large group, and an individual typically may not even know everyone in it.

Why public opinion matters

Public opinion determines reputation. A person with a group of stakeholders should, and must, care what those stakeholders think, because they are ultimately the supporters behind the individual. For an author, these are the most loyal fans who buy every book. In a family, these are the people who will nurse the individual back to health, or toward whom the individual feels the most affinity. In general, the idea is that each person has a core group of perhaps 50 to 200 people, and this core group makes up the web of influences on the individual's behavior. Many individuals care about the opinions of their core group more than anything else in life.

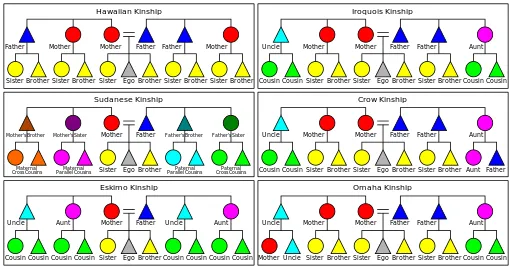

So while public opinion determines reputation in general, in a specific context the reputation that matters includes, but is not limited to, reputation within what is known as the "kinship group", which forms the center of gravity.

See image example:

By ZanderSchubert [CC BY-SA 3.0 (https://creativecommons.org/licenses/by-sa/3.0)], from Wikimedia Commons

There is evidence in the scientific literature that contract enforcement can be facilitated through social networks (Chandrasekhar, Kinnan, & Larreguy, 2014): contract enforcement based on honor and reputation is possible when those involved are "socially close". To think of this in a different way, self governance with feedback would mean that Alice could receive a real-time estimate of how her stakeholders might react to a decision she is contemplating, based on the values they share. In a sense, the ability to do moral analysis, or to run a moral simulation, is the computational equivalent of a conscience: imagining how certain people might react, and mentally mapping out the likely reactions of different important stakeholders. This is something that could be done in a more automated, computerized fashion as technology makes moral analysis possible.

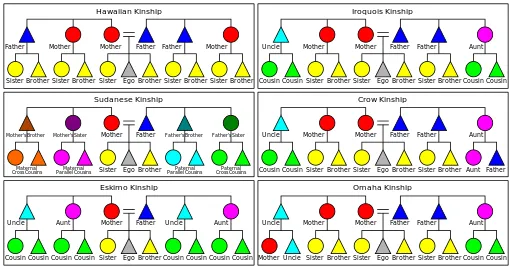

What is stakeholder analysis and how does it contribute to moral analysis?

By Zirguezi [CC0], from Wikimedia Commons

Stakeholder analysis is the assessment of a decision's potential impact on your stakeholders. Perhaps one reason morality does not scale is that a person cannot manage this extremely computationally expensive task (Dunbar's number again). Considering how five people might react to a decision may be realistic, but this quickly becomes a complexity bomb when 5 becomes 500. Who can really imagine their way into the perspectives of 500 different people? In my opinion this will never scale, and that is one of the reasons I make the audacious claim that morality doesn't scale. People can perhaps try to be moral when there are half a dozen to a dozen people in a room, but at 500 people, then 5,000, then 50,000, the combinatorial complexity explodes with every person added. It is simply not feasible, for the same reason that it would not be feasible to compute an optimal chess move on a board with infinitely many squares.
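To make the "complexity bomb" more concrete, here is a small Python sketch that counts two quantities that grow with the number of stakeholders n: the number of stakeholder pairs whose reactions might interact, n(n-1)/2, and the number of possible subsets (coalitions) of stakeholders, 2^n. Treating these counts as a stand-in for the difficulty of moral reasoning is an illustrative assumption, not a formal result, and the function names are hypothetical.

```python
import math


def pairwise_relationships(n: int) -> int:
    """Number of distinct stakeholder pairs: n choose 2."""
    return math.comb(n, 2)


def coalition_digits(n: int) -> int:
    """Number of decimal digits in 2**n, the count of possible stakeholder subsets.

    2**n has floor(n * log10(2)) + 1 digits, so the huge integer is never built.
    """
    return math.floor(n * math.log10(2)) + 1


for n in (5, 500, 5_000, 50_000):
    print(
        f"{n:>6} stakeholders: {pairwise_relationships(n):>13,} pairs, "
        f"2^n subsets is a {coalition_digits(n):,}-digit number"
    )
```

Pairs grow quadratically (10 pairs for 5 stakeholders, 124,750 for 500), while the number of subsets grows exponentially: for 500 stakeholders, 2^n is already a 151-digit number.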

Stakeholder analysis can use big data as an input. Data collected from all stakeholders is processed and analyzed to produce scores that place the results on a spectrum. These scores can then be used to predict or simulate, with varying accuracy, how 5 stakeholders may react based on their interests, or 10, or 100; but even with computers this will not scale indefinitely. As far as I know, scaling this analysis is an open problem in computer science with no known optimal solution, but this approach at least offers some glimpses of the big picture.
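One way to picture "scores on a spectrum" is the minimal Python sketch below. It assumes, purely for illustration, that processing a stakeholder's data yields interest weights in [-1, 1] on a few value dimensions, and that a decision can be described by its impact on those same dimensions. The predicted reaction is then a weighted sum clipped to the range -1 (opposed) to +1 (supportive). The dimensions, weights, names and the linear model itself are hypothetical, not a method specified in this post.

```python
from dataclasses import dataclass


@dataclass
class Stakeholder:
    name: str
    # Hypothetical interest weights in [-1, 1] per value dimension, assumed to be
    # derived from data collected about this stakeholder.
    interests: dict[str, float]


def predicted_reaction(stakeholder: Stakeholder, impact: dict[str, float]) -> float:
    """Score from -1 (strongly opposed) to +1 (strongly supportive).

    Linear assumption: the reaction is the interest-weighted sum of the decision's
    impact on each dimension, clipped to [-1, 1]. Dimensions the decision does
    not touch contribute nothing.
    """
    raw = sum(weight * impact.get(dim, 0.0) for dim, weight in stakeholder.interests.items())
    return max(-1.0, min(1.0, raw))


def stakeholder_analysis(stakeholders: list[Stakeholder], impact: dict[str, float]) -> dict[str, float]:
    """Predicted reaction of every stakeholder to a single decision."""
    return {s.name: predicted_reaction(s, impact) for s in stakeholders}


# Illustrative data only: two hypothetical stakeholders and one decision.
stakeholders = [
    Stakeholder("Bob", {"family": 0.8, "career": 0.2}),
    Stakeholder("Carol", {"career": 0.9, "leisure": -0.3}),
]
decision_impact = {"family": -0.5, "career": 0.6}

for name, score in stakeholder_analysis(stakeholders, decision_impact).items():
    print(f"{name}: {score:+.2f}")
```

Here Bob, who weights family heavily, comes out mildly negative, while Carol, who cares mostly about career, comes out positive. The cost of this scoring grows only with stakeholders times dimensions, because it ignores how stakeholders influence one another; that simplification is part of why such a model offers glimpses rather than the full picture.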

These glimpses make moral analysis possible. If you know that something is extremely controversial among your stakeholders, but you also know that doing it will lead to the best consequences for the majority of them, then moral analysis lets you produce numbers that back up that argument. For example, purchasing a certain product or service might not seem to make sense, but if you can show that over a long period it will benefit all stakeholders, you can make the case in a quantifiable way.
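The "numbers that back up an argument" could take many forms. One hedged possibility, borrowed from ordinary cost-benefit analysis rather than from anything specified in this post, is to estimate each stakeholder's net effect year by year, discount future years, and then check whether the decision comes out ahead for every stakeholder or only for a majority. The effect values and the 5% discount rate below are invented for illustration, and `present_value` and `make_the_case` are hypothetical helpers.

```python
def present_value(yearly_effects: list[float], rate: float) -> float:
    """Discounted sum of yearly net effects (year 0 first) on one stakeholder."""
    return sum(effect / (1 + rate) ** year for year, effect in enumerate(yearly_effects))


def make_the_case(effects: dict[str, list[float]], rate: float = 0.05) -> dict:
    """Summarize whether a decision benefits every stakeholder, or at least a majority.

    The 5% default discount rate is an arbitrary assumption.
    """
    totals = {name: present_value(flow, rate) for name, flow in effects.items()}
    winners = sum(1 for value in totals.values() if value > 0)
    return {
        "totals": totals,
        "benefits_all": winners == len(totals),
        "benefits_majority": winners > len(totals) / 2,
    }


# Hypothetical yearly net effects (arbitrary units) of one purchase on three stakeholders.
effects = {
    "Alice": [-10, 3, 3, 3, 3, 3],  # pays up front, then benefits for five years
    "Bob": [-2, 1, 1, 1],
    "Carol": [0, -0.5, 0.2, 0.2],
}
case = make_the_case(effects)
for name, total in case["totals"].items():
    print(f"{name}: {total:+.2f}")
print("benefits all:", case["benefits_all"], "| benefits majority:", case["benefits_majority"])
```

With these made-up numbers, Alice and Bob come out ahead once the later benefits are counted, while Carol ends up slightly worse off, so the purchase benefits a majority of stakeholders but not all of them: the controversial-but-majority-beneficial situation the paragraph describes.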

Summary

- Individuals have stakeholders (people who take an interest in the future of that individual).

- An individual's stakeholders may include, but are not limited to, members of the kinship group; they can include friends, colleagues, lovers, immediate family, or complete strangers acting as benefactors, such as patrons on Patreon.

- When making a decision, individuals often try to determine how it might affect each of these stakeholders (supporters), because failing to do so could hurt their own long-term interests.

- The process of trying to imagine how each stakeholder may be affected is computationally expensive, so it can benefit from formalization and quantified analysis by machines.

- Moral analysis can take place once there is a clear enough picture of each individual in the group of stakeholders. For example, if an AI learns a model of a person from how that person tends to react, then simulations based on that model may be possible. As far as I can tell this is an open area of research; I haven't found anyone specifically studying moral amplification or moral analysis in this way.

References

Chandrasekhar, A. G., Kinnan, C., & Larreguy, H. (2014). Social networks as contract enforcement: Evidence from a lab experiment in the field (No. w20259). National Bureau of Economic Research.

Cruz, C., Keefer, P., & Labonne, J. (2016). Incumbent advantage, voter information and vote buying (No. IDB-WP-711). IDB Working Paper Series.