Building things is hard.

Doesn't matter what is being built.

Could be a business.

Could be a product.

Could be logistics.

Could be a service.

Without experience in whatever we are building, we make many, many mistakes along the way. If we don't know how big the thing needs to be and we build it too small, horrible bottlenecks appear that have to be fixed with sub-optimal patchwork solutions. If we build it too big (especially a business), overhead costs pile up, and the company will go bankrupt because there isn't enough demand to fill the volume the system was designed to handle (assuming the final product even gets finished).

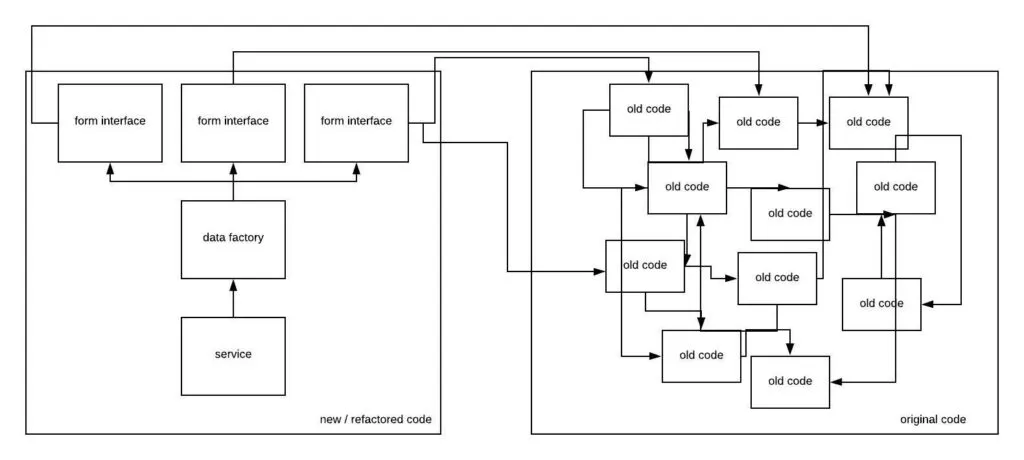

A small project often becomes a non-modular, non-scalable solution just to get the proof of concept up and running as quickly as possible. Then, if the prototype succeeds, all the work done so far can turn out to be not only unhelpful but fully counter-productive. In certain situations, the entire project should be scrapped and rebuilt from the ground up rather than patching the same problems over and over and over again, very inefficiently. However, scrapping a project and starting fresh is so difficult to do... and often impossible given the financial constraints and potential politics involved.

It seems that no matter what, when it comes to building, we are surrounded by problems that need to be solved very carefully. Ironically, if we spend too much time trying to plan things out, we've wasted our time again, when we should have just cranked out the prototype and refactored after field testing gave us valuable new information. Expecting everything to work out as planned without accounting for unknowns is... very risky and often downright foolish.

At the end of the day, staying agile during the development process is very, very difficult. There are strategies we can employ to ease the burden, but many of them are learned through painstaking experience and the mistakes made while putting thousands of hours into the thing we are mastering.

In the context of coding: modular is always better.

In fact, this is true for pretty much all open-source development, including things like 3D printing and real-world products.

If we can build a battery that powers dozens of other devices, isn't that the ideal situation? Funny how that's the opposite of what corporations do. They don't want modular products. They don't want other people making tweaks to their work, to the point of having lawyers send cease-and-desist letters to anyone who dares defy their IP.

Clearly, there's so much room for improvement when it comes to collaborative development that it becomes obvious that humanity as a whole is operating at 1% efficiency or less. Everyone is running around doing their own thing, competing with one another at every turn within a technological landscape of abundance. It's insane how the world works today. In a few decades people will look back and laugh about how it could have ever worked this way. It's absurd on every level at this point. Tech has advanced exponentially, while society itself is still in the Dark Ages.

How to get there from here?

Very carefully, I suppose. Again, modularity is key to the future. We must build modules that are self-contained and plug-and-play with other modules. We must give up the idea that intellectual property is valid. It is not. It can only exist when enforced by nation-states using threats of violence. That's not how the future is going to operate. We need to start transitioning today so we can hit the ground running when that future arrives.

In terms of programming, it's best to try to contain code within a function that can be called multiple times, if possible. Even better, the greater program itself could be a module within multiple other programs. No sense in reinventing the wheel.
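A minimal Python sketch of both ideas, assuming a hypothetical module file named `word_stats.py` with a hypothetical `top_words` function (neither comes from the original post). The logic lives in a self-contained function that can be called any number of times, and the `if __name__ == "__main__":` guard lets the same file run as a standalone program or be imported as a module by other programs.

```python
"""word_stats.py: a hypothetical example of a reusable module."""
from collections import Counter


def top_words(text: str, n: int = 3) -> list[tuple[str, int]]:
    """Return the n most common lowercase words in text, with their counts.

    No global state is touched, so the function can be called repeatedly
    and reused by any program that imports this module.
    """
    words = text.lower().split()
    return Counter(words).most_common(n)


def main() -> None:
    # Standalone behaviour: analyze a small built-in sample string.
    sample = "build it small build it modular build it again"
    for word, count in top_words(sample):
        print(f"{word}: {count}")


# Runs only when the file is executed directly (python word_stats.py);
# another program doing `from word_stats import top_words` skips this block.
if __name__ == "__main__":
    main()
```

Another program could then reuse the function without copying it, e.g. `from word_stats import top_words` followed by `top_words(some_text, 5)`, instead of reinventing the wheel.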

The concept of modularity is extremely important in development because it can be applied no matter how big the project is. Even if we have no idea how far the thing needs to scale, whether it's a prototype or full-blown production, the value of reusable blueprints cannot be overstated. The more a blueprint can be reused, the more modular and helpful it becomes.

What about flat architecture?

This is a buzzword we hear a lot in crypto, and for good reason. Many of the best crypto projects are building out rather than up. Instead of building a pyramid of permissions and centralized efficient solutions, we are going the opposite route (rewiring the very foundations of money itself). We are building the base of a structure... a massive one, and we have no idea how high it will go.

During these infant stages of crypto, we are building the ground floor on top of shifting sands. Anything that builds too high and becomes too centralized implodes at the first bear market. We've seen this with our own eyes multiple times already. Hell, we are going through the wringer right now as I write these words. A bear market is great for flushing out the garbage and making way for legitimate products.

Does that mean crypto will eventually become centralized?

Likely, but the scale will be so much bigger than anything we see today that it won't seem centralized to anyone who was alive before everything scaled up. For example, try thinking on a galactic scale. We only think about Earth because Earth is the only planet we've ever colonized. What if tech gets so insane that humanity colonizes a thousand planets? That is the level of scale at which crypto changes the game. The economy will get a thousand times bigger in a very short amount of time (relatively speaking, of course). Trying to wrap our heads around the outcome is basically impossible. There are simply too many variables and too many butterfly effects in play. All I can promise is that it's going to be a wild ride.