The Sapir-Whorf hypothesis, also known as linguistic relativity, is defined by Wikipedia as "a principle suggesting that the structure of a language affects its speakers' worldview or cognition, and thus people's perceptions are relative to their spoken language." This theory, which is taught (or at least debated) in a number of fields, including anthropology, philosophy, and psychology, is usually discussed as having two different forms.

The strong version of the Sapir-Whorf hypothesis, linguistic determinism, has been largely abandoned since the middle of the last century. It posits that a person's native language determines the way that person thinks.

The weak version of the Sapir-Whorf hypothesis, for which there is some empirical evidence, posits that language influences the way a person thinks.

The "text" component of text-to-image AI models is usually OpenAI's CLIP model. CLIP is supposed to be trained exclusively on English-language text: image descriptions, metadata, tags, and so on. However--and this isn't something I've really seen discussed--common words in other widely-used languages seem to have made their way into the dataset as well. Given the nature of the text data, it's not super surprising that this is the case. Due to this language "bleed-through," some rough image generation is possible using English-language CLIP in non-English languages (and even non-Latin alphabets) that have a large online speaker base.

In my now-fairly-extensive work with text-to-image AI models, I've noticed that how CLIP navigates prompts in these non-English languages and non-Latin alphabets pretty closely mimics the Sapir-Whorf hypotheses, especially the weak one. This is surely just an artifact of how the CLIP model was trained, but I think it still bears pointing out. It's super fun to discuss how AI "thinks" in the context of already-well-established linguistic terminology.

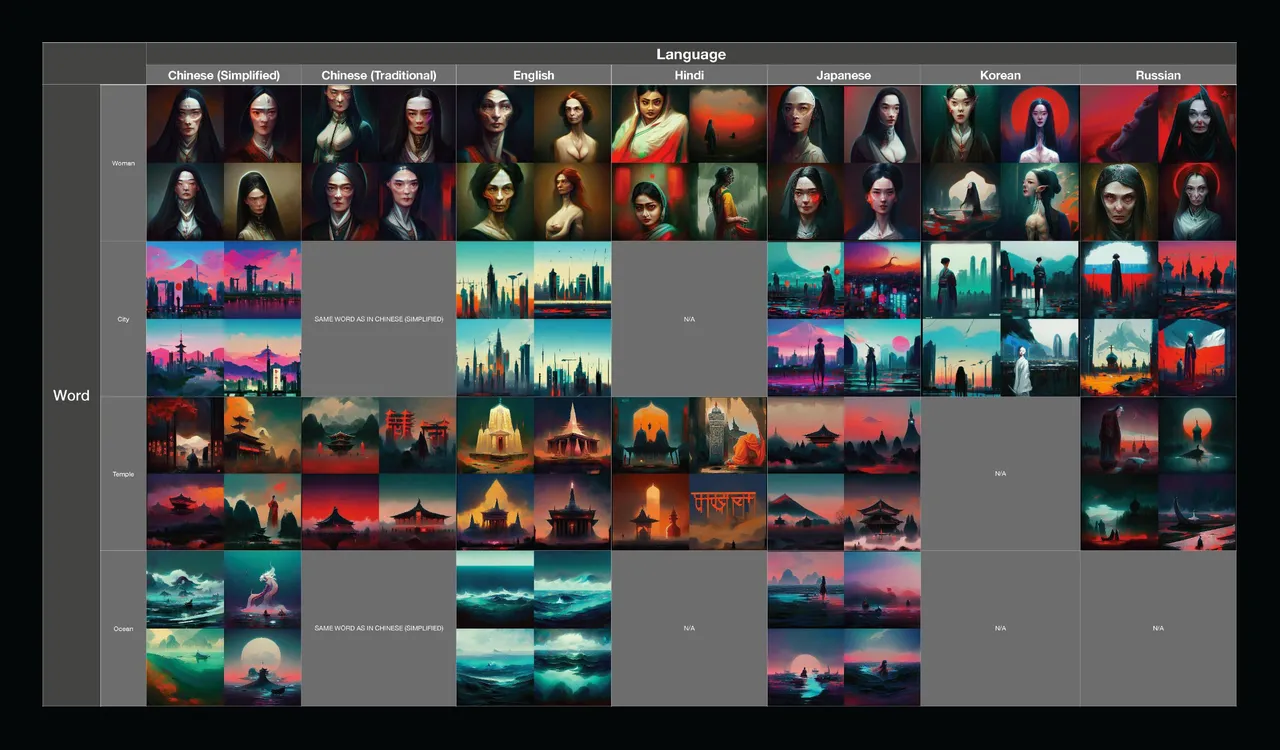

To be clear, by playing around with this concept, I'm not saying it follows that Sapir-Whorf accurately describes language’s influence on human cognition. I am most especially not arguing for the validity of the ‘strong’ Sapir-Whorf hypothesis, which is pretty universally discredited these days. It can't be denied, though, that the exact same prompt in different languages and alphabets produces results that are accurate to the spirit of the word, but which reflect the "flavor" of the region and culture to which the language and alphabet belong.

To illustrate this point, I brought a chart!! All the images were made with Midjourney.

You can find a higher-res version here.

It will be really interesting to see if this continues to be the case if/when multilingual CLIP models emerge on the scene. I'm betting it will--at least to some degree.
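If you want to try this comparison yourself, it helps to keep the prompts organized by language and alphabet before pasting them into your image generator of choice. Here's a minimal sketch of one way to do that. The word list is a hypothetical example ("house" in a few languages), not the prompts from my chart, and `script_of` is a helper name I made up for illustration. It uses the first word of each character's Unicode name as a rough stand-in for its alphabet, which is a shortcut rather than a real script lookup.

```python
import unicodedata

# Hypothetical example: the word "house" in several languages.
# These are illustrative prompts, not the ones used for the chart.
PROMPTS = {
    "English": "house",
    "Russian": "дом",
    "Japanese": "家",
    "Arabic": "بيت",
    "Hindi": "घर",
    "Hebrew": "בית",
}


def script_of(text):
    """Roughly guess the alphabet of `text` from its first letter.

    Uses the first word of the character's Unicode name (e.g. "CYRILLIC"),
    which is an approximation, not an official script property.
    """
    for ch in text:
        if ch.isalpha():
            return unicodedata.name(ch).split()[0]
    return "UNKNOWN"


def prompt_table(prompts):
    """Return (language, rough script, prompt) rows, one per language."""
    return [(lang, script_of(p), p) for lang, p in prompts.items()]


if __name__ == "__main__":
    for lang, script, prompt in prompt_table(PROMPTS):
        print(f"{lang:10} {script:12} {prompt}")
```

Each row is one prompt to run. Keeping the language and rough alphabet next to the prompt makes it easier to line up the resulting images side by side afterward.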

BONUS:

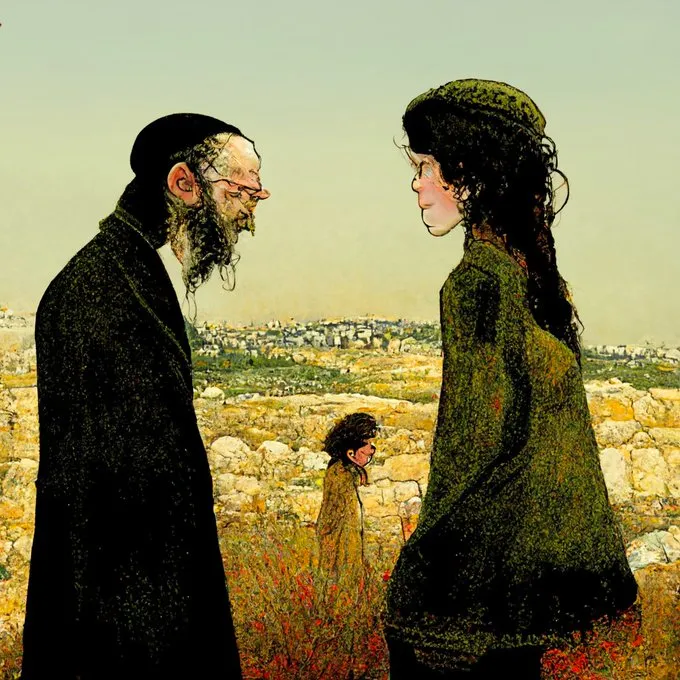

Hebrew is not used commonly enough on the internet (or at least in the subsection CLIP was trained on) for CLIP to have a rough idea of Hebrew words' equivalent images. However, a prompt in Hebrew still generates images that look a lot like Jewish art. People and iconography are often distinctively Jewish, there are lots of deserts, and the model even attempts Hebrew calligraphy at times, though it clearly doesn’t recognize the words it's being prompted with.

Here are some examples of this phenomenon, which also applies to other languages, such as Arabic and Urdu.

For a list of Google Colab notebooks that you can use to make your own AI art, see KaliYuga's Library of AI Google Colab Notebooks.