TL;DR: no.

32GB servers with fast storage such as SSD or NVMe are still good enough to run low-memory nodes. The trick is to keep the shared file in RAM (on a tmpfs device) and to put SWAP on a fast disk (or disks). This approach was also described by @gtg in his post Steem Pressure #3, which I highly recommend reading.

From a configuration standpoint, we only need to make sure that the tmpfs and SWAP volumes have enough room to hold the shared file: tmpfs must be allowed to grow at least as large as the file, and RAM plus SWAP must be able to back it. The Linux kernel takes care of the rest and starts paging when required. In short, paging moves memory pages that don't fit in RAM out to the SWAP device on disk and reads them back in when they are accessed again.
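The sizing rule above can be written down as a tiny check. This is a minimal Python sketch, assuming sizes in GB, that the whole shared file must fit under the tmpfs size limit, and that RAM plus SWAP must cover the file plus any other memory usage. The function name `fits_in_tmpfs_and_swap` and the `other_usage_gb` parameter are hypothetical, introduced only for illustration; the numbers come from this post (33GB shared file, 32GB RAM).

```python
def fits_in_tmpfs_and_swap(shared_gb: float, tmpfs_gb: float, ram_gb: float,
                           swap_gb: float, other_usage_gb: float = 0.0) -> bool:
    """Return True if a shared file of `shared_gb` can live on tmpfs.

    Illustrative assumptions (not taken from steemd itself):
    - the tmpfs size limit must be at least the shared file size;
    - tmpfs pages live in RAM and can be paged out to SWAP, so
      RAM + SWAP must cover the file plus any other memory usage.
    """
    room_on_tmpfs = tmpfs_gb >= shared_gb
    room_in_ram_and_swap = ram_gb + swap_gb >= shared_gb + other_usage_gb
    return room_on_tmpfs and room_in_ram_and_swap


# Default /dev/shm on a 32GB server is half of RAM (16GB): too small for 33GB.
print(fits_in_tmpfs_and_swap(shared_gb=33, tmpfs_gb=32 / 2, ram_gb=32, swap_gb=32))  # False
# /dev/shm remounted at 48GB, backed by 32GB RAM + 32GB SWAP.
print(fits_in_tmpfs_and_swap(shared_gb=33, tmpfs_gb=48, ram_gb=32, swap_gb=32))      # True
```

Keeping the two conditions separate makes it obvious which knob to turn: a `False` from the first one means remounting /dev/shm bigger, a `False` from the second means adding SWAP.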

My setup

By default on Linux, /dev/shm (tmpfs) is sized at half of the available RAM, so a 32GB server gets a 16GB tmpfs. During my tests the shared file had already grown past 33GB, so before starting steemd I need to remount /dev/shm with a bigger size. I use 48GB for /dev/shm and 32GB for SWAP:

```
# mount -o remount,size=48G /dev/shm/
```
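To confirm that the remount took effect and that SWAP is active, the sizes can be read back from the running system. This is a minimal Python sketch, assuming a Linux host that exposes `/proc/meminfo`; the helper names `tmpfs_size_bytes` and `swap_total_bytes` are hypothetical.

```python
import os


def tmpfs_size_bytes(path: str = "/dev/shm") -> int:
    """Total size of the filesystem mounted at `path`, in bytes."""
    st = os.statvfs(path)
    # f_blocks is the total block count, f_frsize the fragment (block) size.
    return st.f_blocks * st.f_frsize


def swap_total_bytes(meminfo_path: str = "/proc/meminfo") -> int:
    """SwapTotal from /proc/meminfo (reported in kB, i.e. KiB), in bytes."""
    with open(meminfo_path) as f:
        for line in f:
            if line.startswith("SwapTotal:"):
                # The line looks like: "SwapTotal:      31250428 kB"
                return int(line.split()[1]) * 1024
    raise ValueError("SwapTotal not found in " + meminfo_path)
```

Right after the remount, `tmpfs_size_bytes() / 1024**3` should report about 48, since tmpfs interprets the `G` suffix in `size=48G` as GiB. Keep in mind that a remount done this way does not survive a reboot.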

How long does it take to replay 91GB of blockchain?

The replay time depends heavily on the hardware, so please don't rely too much on the numbers below, but I hope they give you at least a rough point of reference.

I used 4 dedicated servers for my tests:

| Disk configuration | Memory | CPU | Replay time (s) |
|---|---|---|---|
| 1xSSD / SAMSUNG MZ7LN256HMJP | 32 GB | Atom C2750 | 35708 |
| 2xSSD (RAID-0) / Samsung SSD 850 EVO 250GB | 64 GB | Xeon D-1540 | 12762 |
| 2xNVMe (RAID-0) / INTEL SSDPE2MX450G7 | 32 GB | Xeon E3-1245 v6 | 10234 |
| 4xHDD (RAID-0) / WDC WD10EZEX-00BN5A0 | 32 GB | i7-4790 | 21456 |

| Disk configuration | Disk read speed | Disk write speed | PassMark score | PassMark single-thread score |
|---|---|---|---|---|
| 1xSSD / SAMSUNG MZ7LN256HMJP | 271.61 MB/s | 2.0 MB/s | 3805 | 582 |
| 2xSSD (RAID-0) / Samsung SSD 850 EVO 250GB | 925.36 MB/s | 900 kB/s | 10573 | 1344 |
| 2xNVMe (RAID-0) / INTEL SSDPE2MX450G7 | 1.4 GB/s | 43.2 MB/s | 10410 | 2191 |
| 4xHDD (RAID-0) / WDC WD10EZEX-00BN5A0 | 678.43 MB/s | 126 kB/s | 9998 | 2285 |

Without a doubt, the winner is the server with NVMe disks: the full replay finished in under 3 hours (10234 s, ~2.8 h). I was also very pleasantly surprised by the performance of the server with 4 HDDs.
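To make the replay times easier to compare, here is a small Python sketch that converts the seconds from the first table into hours and expresses each result relative to the fastest server. The server labels are shortened for readability; the numbers are copied from the table.

```python
# Replay times in seconds, copied from the first table.
replay_seconds = {
    "1xSSD / Atom C2750": 35708,
    "2xSSD RAID-0 / Xeon D-1540": 12762,
    "2xNVMe RAID-0 / Xeon E3-1245 v6": 10234,
    "4xHDD RAID-0 / i7-4790": 21456,
}

# Server with the shortest replay time.
fastest = min(replay_seconds, key=replay_seconds.get)

# Print servers from fastest to slowest, in hours and relative to the fastest.
for name, secs in sorted(replay_seconds.items(), key=lambda kv: kv[1]):
    hours = secs / 3600
    slowdown = secs / replay_seconds[fastest]
    print(f"{name:34s} {hours:5.2f} h  ({slowdown:.2f}x the fastest)")
```

The NVMe replay works out to roughly 2.8 hours, and the Atom box took about 3.5 times as long.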

All data below comes from the weakest server, 1xSSD/Atom C2750 ;-)

Memory, I/O and disk utilization

dstat output during the replay process (1xSSD/Atom C2750)

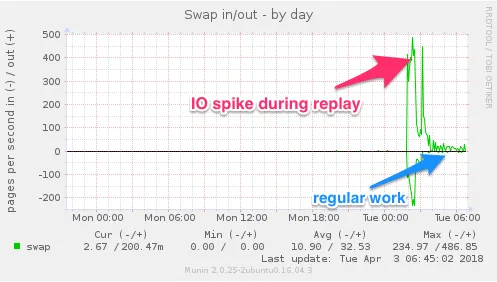

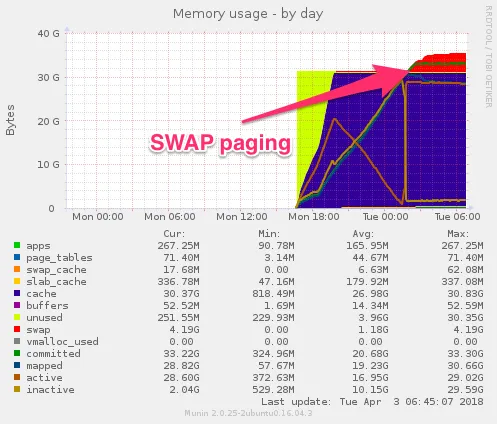

SWAP paging (1xSSD/Atom C2750)

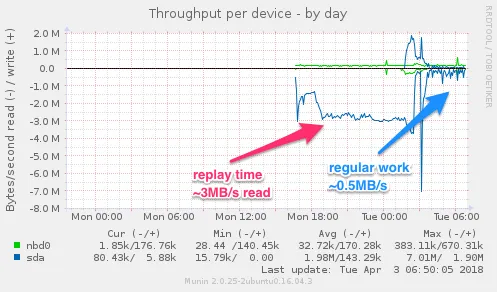

/dev/sda throughput (1xSSD/Atom C2750)

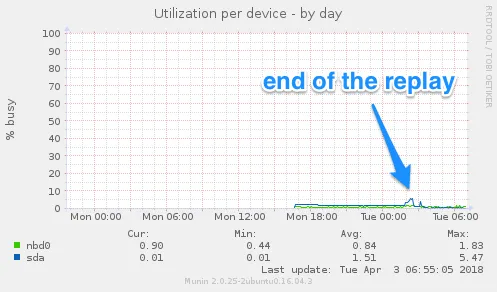

/dev/sda utilization (1xSSD/Atom C2750)

Beginning of the SWAP paging (1xSSD/Atom C2750)

```
$ free -m
              total        used        free      shared  buff/cache   available
Mem:          32094         329         305       29478       31459        1815
Swap:         30517        4288       26229
```
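If you want to track when paging starts without eyeballing `free -m`, its output is easy to parse. This is a minimal Python sketch, assuming the column layout shown above (the `free` from procps); the function name `parse_free_m` is hypothetical. Per the `free` man page, the `shared` column is mostly tmpfs (Shmem in `/proc/meminfo`), which is where the shared file lives in this setup.

```python
def parse_free_m(text: str) -> dict:
    """Parse `free -m` output into {"Mem": {...}, "Swap": {...}} (values in MiB)."""
    lines = [ln for ln in text.strip().splitlines() if ln.strip()]
    header = lines[0].split()  # total used free shared buff/cache available
    result = {}
    for line in lines[1:]:
        label, *values = line.split()
        label = label.rstrip(":")
        # Swap has fewer columns than Mem, so zip stops at the shorter list.
        result[label] = dict(zip(header, map(int, values)))
    return result


sample = """
              total        used        free      shared  buff/cache   available
Mem:          32094         329         305       29478       31459        1815
Swap:         30517        4288       26229
"""

stats = parse_free_m(sample)
swap = stats["Swap"]
print(f"SWAP in use: {swap['used']} MiB ({100 * swap['used'] / swap['total']:.1f}%)")
# SWAP in use: 4288 MiB (14.1%)
print(f"tmpfs/shared memory: {stats['Mem']['shared']} MiB")
# tmpfs/shared memory: 29478 MiB
```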

- During the replay, the read/write ratio is ~3:1 (91GB of blockchain read vs. a 33GB shared file written)
- After the replay, paging drops back to a minimal level
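The ~3:1 figure follows directly from the two sizes quoted in the post. A quick Python check, counting only the bulk block-log read and the shared-file write and ignoring any extra SWAP traffic (an assumption for the sake of the arithmetic):

```python
blockchain_read_gb = 91  # blockchain read during the replay (from the post)
shared_file_gb = 33      # shared file written during the replay (from the post)

ratio = blockchain_read_gb / shared_file_gb
print(f"read/write ratio ~ {ratio:.2f}:1")  # read/write ratio ~ 2.76:1, i.e. roughly 3:1
```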

Conclusion

My biggest problem was convincing myself to let the servers use SWAP: for many years I have been learning how to optimize various systems, and SWAP paging was always something I didn't want to see... but in this case I'm going to turn a blind eye and keep using some of my 32GB nodes.

The data and graphs speak for themselves ;-)

If my contribution is valuable to you, please vote for me as a witness.

May The Steem Be With You!