Introduction

One question has been brewing in my mind over the years, judging from the astronomical growth we are witnessing in technology: would the future of our species (the extant modern humans, Homo sapiens sapiens) be threatened by machines? This thought became a dread when I saw the "Terminator" movies (Judgment Day and Rise of the Machines), and how machines arose to exterminate the human race. I was like, "holy shit!! Would machines evolve to be so self-aware as to fight the system that created them?" Okay, the truth is that "Judgment Day" hasn't happened (yet), but what would be the future of humans with the emergence of superintelligent machines? Welcome again to the future of technology.

[Image Source: Maxpixel. CC0 Licensed]

AI evolves

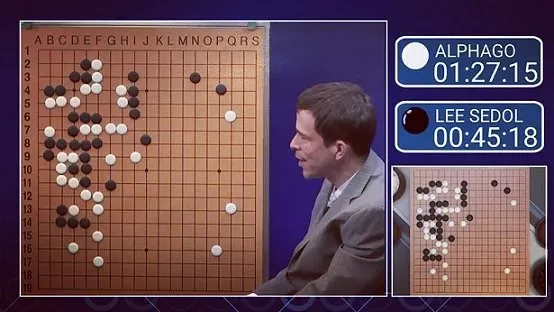

Since the term AI was coined over six decades ago by John McCarthy [ref], many advances have been witnessed, starting from Reactive Machines. Just like the name suggests, reactive machines are pre-programmed machines that are straitjacketed into performing one specific task, and they do not form new memories from experience. A popular example is Google DeepMind's AlphaGo. Even after beating human champions, the damn AI didn't even know that Go is categorized as a board game [ref]. No wonder it has been said that "common sense is actually not as common as it appears". These AIs definitely lack common sense and basic human intelligence; they are just reactive by function and application.

No doubt, these reactive machines (which fall under Artificial Narrow Intelligence) do not pose any serious threat to our existence; at the very least, they lack self-awareness. But our focus is not on them; it is on the advances that have arisen from them. One step up from the reactive machines is another type of AI: the Limited Memory AI. Unlike the "dumbass" reactive machines, this AI can relate to a present event, form a perception that becomes an entirely new memory, and also make inferences from pre-existing memory in its database. Examples are the advanced collision detection systems fitted into self-driving cars and your personal office and home virtual assistants (like Siri).

But does this pose any threat to us? Okay, try asking Siri this question and see if it is wise enough to grasp the logic behind it: "Hi Siri, if you light a candle from the three edges, will the flame tilt towards the vertical or horizontal side?" Even the dumbest humans would know that candles don't have three edges and that you can only light the top, but your Siri wouldn't know any of this. So should we be concerned about the Limited Memory AI? Certainly not.

We've seen two subcategories of AI and we're not feeling threatened one bit yet, but hey!! There are two more to go, one of them being Artificial General Intelligence (AGI). Just like the name "general" suggests, this AI can function in a broader capacity than the AIs seen earlier. Some have inferred that AGI is an enhanced version of the Limited Memory AI, but this AI has the capacity to function in more than just a stipulated, pre-programmed task, and it can also form its own memory. No wonder Dr Ray Kurzweil often refers to this as the "strong AI", which, viewed holistically, comes closer to fulfilling the definition of AI.

Now some may ask: has any AI absolutely fulfilled the definition of what AI is? Remember, AI seeks to replicate (or rather, simulate) human-level cognition and intelligence in machines. This intelligence covers common sense as well as the other domains: the affective domain (which has to do with emotions, mood, and even consciousness) and the psychomotor domain. But as it stands, the major concentration has been on the cognitive domain, and that is why we have seen machines that beat human champions at games but suck at common sense.

[AlphaGo Vs Human. Source Flickr. Author: Buster Benson. CC BY-SA 2.0 Licensed]

But should we feel threatened by the "Strong AI"? Well, because it sits on the borderline of actualizing a true AI, we should keep an eye on it. But the major threat does not actually lie there; it lies in the next generation of AI, the Artificial Super Intelligence (ASI).

Behold the threat

Remember we said that the affective domain has been lacking in the AIs discussed earlier; efforts are now being made to incorporate these missing qualities into machines. Imagine when consciousness, emotions, and self-awareness get incorporated into machines: what do we hope to see? Do you remember one of the predictions made by a very renowned futurist, Ian Pearson:

Artificial Intelligence (talking about the ASI) would be many times smarter than humans - Ian Pearson [Source]

I'm sure you know he was not referring to the reactive machines when he made that statement. Now some may ask, "do we have the ASI yet?" Well, there is a little comfort there: at the moment it is only theorized, but this doesn't imply that it isn't realizable in the not-so-distant future. In fact, attempts are being made here and there in that regard, like we saw in the case of Sophia (the advanced humanoid robot that was granted citizenship [ref]). Sophia is nowhere near the ASI, but it is a step in the right direction.

[Sophia the Robot. Image Source: Wikimedia Commons. Author: MSC. CC BY 3.0 DE Licensed]

Now the question is: how do we hope to maintain our supremacy as the superior being when something more intelligent than us arises? Or how do we even hope to control these AIs? But again, looking at this holistically, we should not focus only on the "threat" aspect of it. Consider these facts: the amount of data being generated in this era is on the exabyte scale, and human cognition is too limited to analyze all this data with precision and accuracy. But look at this: a supercomputer called the Tianhe-2 (MilkyWay-2) has the insane processing power to perform a whopping 33 quadrillion calculations in just one second [ref]. Can you beat that? Imagine when this processing power gets incorporated into AIs; wouldn't that simplify the future of data processing and analysis? Not to mention the other supercomputing beasts that have arisen (like the K computer).

Evolution in AI != Evolution in humans

It has been postulated that we are actually evolving alongside these machines, and even our devices. According to this report, human evolution is inferred to have sped up massively in the last couple of decades, and this could be remotely linked to the evolution of technology (of which AI is a major driving force). But the speed at which AIs are evolving has outstripped the rate at which our species is evolving. For example, a few decades ago, one of the fastest computers would probably run only a small number of calculations per second by comparison. Compare that to the Tianhe-2: in a relatively short time, massive complexity has arrived in the computing world.

With the speed at which machines (and AI) are evolving, it is only logical to infer that it won't take long before they leave the human species behind. So what would be our hope of maintaining relevance? Can I shock you? Before, it was our absolute prerogative to program these AIs, but just recently a major breakthrough was achieved with the creation of an Artificial Intelligence that can code and program other Artificial Intelligence [ref]. It seems there could be a paradigm shift from humans to AIs, with the AIs taking the wheel in creating their own code. So how do we hope to stand against something that would evolve to become self-aware? Maybe the prediction of Elon Musk may lead us to the right answer:

Humans would need to merge with machines to protect the human race - Elon Musk

[Image Source: Flickr. Author: Gerd Leonhard. CC BY-SA 2.0 Licensed]

How weird: technology that was initially seen as an aid to mankind is now dictating the pace for us. And this could be what Dr Ray Kurzweil meant when he speculated that the ultimate destination of these advancements would be the "Technological Singularity", when the information of the entire human population would be collated and processed alongside the combined computational power of all the devices and machines in the world. And the truth is, this process has already been initiated. Maybe we should just brace ourselves for what's coming.

Conclusion

The emergence of Artificial Intelligence has been one of man's greatest attempts to simulate human-level cognition in machines, but as we've seen, this has not left the fate of humankind without obvious threats, one of them being that machines might outperform us in tasks that were exclusively reserved for us. Well, the question is: is tech still meant to assist us, or to sideline us?

Thanks for reading

References for further reading:

- Towardsdatascience - Advances in Artificial General Intelligence

- Human-machine merger - Pathway to singularity

- Computational_intelligence.pdf

- Self-awareness in machines - Computer Consciousness

- Technological Singularity and Superintelligent machines

All images are CC Licensed and are linked to their sources
