Repository

https://github.com/utopian-io/utopian-bot

Introduction

Today there was an unusually long delay in @utopian-io voting after VP reached 100%. I reported it immediately on Discord, and @elear seemed to fix the server problem after @amosbastian contacted him. I really appreciate both of you developing and maintaining the Utopian bot. (I hope @elear also sees my previous suggestion on Utopian voting priorities: Utopian bot sorting criteria improvement to prevent no voting.)

Since I started using Utopian about 2 months ago, I had never paid much attention to the Utopian bot. But the recent downtime of steem-ua voting for Utopian posts led me to look into the Utopian bot too, and today's long delay made me wonder what the usual delays after 100% VP are. So here is the analysis.

Scope

- Utopian bot delay and downtime after 100% VP

- delay: the usual time between reaching 100% VP and the first vote

- downtime: an unusually long delay (e.g., caused by a network or server problem)

- Data: 2018-11-05 to 2019-02-05 (3 months). Based on Developing the new Utopian bot and my communication with amosbastian, the new bot seems to have been in use for about 3 months.

I could already guess the mean or median delay (see the theoretical analysis section), but I wanted to double-check it, and I also wanted to see how long the downtimes are and how frequently they occur.

Results

Theoretical analysis of the delay - Law of large numbers (LLN)

For the delay, I already knew from the code and the description on GitHub that the average delay would be 2.5 min + alpha.

https://github.com/utopian-io/utopian-bot/blob/master/utopian_bot/upvote_bot.py#L818-L819

- The bot just exits when VP isn't 100% yet.

- The bot is called every 5 minutes by a cron job.

While the 5 minutes in the description is only an example, we can easily guess that it's the value actually used :) And it turns out to be true :)

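Since VP becomes full at an essentially arbitrary moment within each 5-minute cron interval, the wait until the next run is roughly uniform between 0 and 5 minutes, so by the Law of large numbers the average delay should converge to 2.5 minutes :) So simple.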
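A quick simulation makes this concrete. It is only a sketch under my own modeling assumption (not taken from the bot's code) that the moment VP becomes full is uniformly distributed within the cron interval; `CRON_INTERVAL_MIN` and `simulate_delays` are illustrative names.

```python
import random

CRON_INTERVAL_MIN = 5.0  # cron period, assumed from the bot description


def simulate_delays(n_rounds, interval=CRON_INTERVAL_MIN, seed=0):
    """Simulated delays (minutes) from 'VP becomes full' to the next cron run.

    Assumes VP becomes full at a uniformly random point within the interval,
    so the wait until the next run is uniform on (0, interval].
    """
    rng = random.Random(seed)
    delays = []
    for _ in range(n_rounds):
        full_at = rng.uniform(0, interval)  # phase within the cron interval
        delays.append(interval - full_at)   # wait until the next cron run
    return delays


delays = simulate_delays(100_000)
mean = sum(delays) / len(delays)
print(f"mean delay: {mean:.2f} min")
```

With many rounds the sample mean settles near half the cron period, which is exactly the LLN argument above.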

But there is actually a further delay due to the preparation steps: fetching comments/contributions and preparing init_comments and init_contributions (up to L#826).

The actual voting starts from handle_comments. I was curious about this overhead (I don't know why), so I cloned the code and found that the preparation takes only about 5-6 seconds on my laptop, which is negligible.

So the average normal delay should be about 2.5 minutes plus a few seconds. For longer delays, i.e., downtimes, we need to look at the data.
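Written as a formula, under the same uniform-phase assumption, with cron period $T = 5$ min and a preparation time $t_{\mathrm{prep}} \approx 6$ s (my laptop measurement above, assumed to be similar on the server), the expected delay $D$ is:

$$
\mathbb{E}[D] = \frac{T}{2} + t_{\mathrm{prep}} \approx \frac{300\,\mathrm{s}}{2} + 6\,\mathrm{s} = 156\,\mathrm{s} = 2.6\,\mathrm{min}
$$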

Data Analysis

Calculation of Voting Power

There is no record of voting power at a given time, so it has to be calculated, and that was the first nontrivial task. From the code, it's clear that the Utopian bot starts voting only after VP is full, so we know a time when VP was full. I therefore picked 2018-11-05 22:15:15 as the start time.

VP calculation (see the code for details)

- For each vote, VP decreases in proportion to the vote weight.

- But we also need to account for VP recovery.
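The two rules can be sketched as small helper functions. They mirror the model used in the analysis script in Tools and Scripts (linear recovery over 5 days, and a 100% vote costing 2% of the current VP); the function names are illustrative, and the 2% rule is that script's simplification rather than a full reimplementation of Steem's consensus code.

```python
# Steem regenerates voting mana linearly from 0% to 100% over 5 days.
REGEN_SECONDS = 5 * 24 * 60 * 60  # 432000


def regenerate(vp, elapsed_seconds):
    """VP (%) after elapsed_seconds of linear recovery, capped at 100."""
    return min(100.0, vp + elapsed_seconds * 100 / REGEN_SECONDS)


def apply_vote(vp, weight):
    """VP (%) after one vote; weight in basis points (10000 = 100% vote).

    Same rule as the analysis script: a 100% vote costs 2% of the current VP.
    """
    return vp * (1 - weight / 10000 * 0.02)


def seconds_to_full(vp):
    """Seconds of recovery needed to go from vp (%) back to 100%."""
    return (100 - vp) * REGEN_SECONDS / 100


# Example: ten full-weight votes starting from 100% VP, then time until full again.
vp = 100.0
for _ in range(10):
    vp = apply_vote(vp, 10000)
print(f"VP after 10 votes: {vp:.2f}%, full again in {seconds_to_full(vp) / 3600:.1f} h")
```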

Actually, there are other factors that can affect VP, e.g., power-ups, power-downs, and delegations. But examining the data, there were no noticeable events, so I ignore them. In particular, the witness reward is negligible: it is about 8,400 STEEM per month, but as you know, Utopian's SP is more than 3 million. So over a short period of time, the effect of powering up the witness reward is negligible. Likewise, the power-down amount was also negligible.
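A quick back-of-envelope check of "negligible", using the two figures above. The 30-day month is my assumption, and because Utopian's SP is more than 3 million, these ratios are upper bounds on the relative SP change.

```python
WITNESS_REWARD_PER_MONTH = 8400  # STEEM, figure quoted above
UTOPIAN_SP = 3_000_000           # lower bound ("more than 3 million")
REGEN_DAYS = 5                   # one full VP recovery period
DAYS_PER_MONTH = 30              # rough assumption

# Relative SP increase from the witness reward over one month and over 5 days.
monthly_share = WITNESS_REWARD_PER_MONTH / UTOPIAN_SP
per_regen_share = WITNESS_REWARD_PER_MONTH * REGEN_DAYS / DAYS_PER_MONTH / UTOPIAN_SP

print(f"per month: {monthly_share:.2%}")                  # 0.28%
print(f"per 5-day recovery period: {per_regen_share:.3%}")  # 0.047%
```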

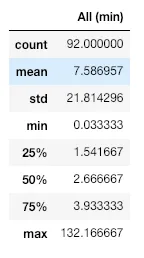

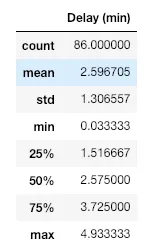

Summary Statistics

Interpretation

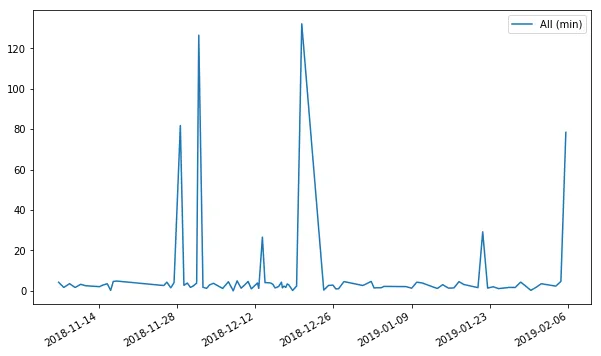

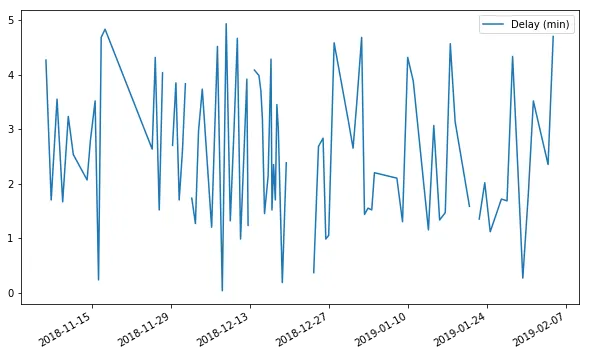

Over 3 months, there were 93 voting rounds (i.e., 92 occasions on which VP became full), and the mean delay was 7.59 minutes. Of course, this is inflated by the longer downtimes. If we consider only delays < 5 minutes, the mean delay is 2.60 minutes, which is exactly what the theory predicted: 2.5 minutes + 6 seconds! It's almost scary how well LLN works :) This can also be seen in the following graph, where the delays look randomly scattered around 2.5 minutes.
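The statistics above can be computed from the `full_duration` list (delays in seconds) produced by the script in Tools and Scripts. This is a sketch: the 5-minute cutoff matches the "< 5 minutes" filter above, treating every longer delay as a downtime is my assumption, and the sample values in the usage line are made up purely for illustration.

```python
import statistics

CUTOFF_MIN = 5.0  # delays shorter than one cron period count as "normal"


def delay_summary(delays_seconds):
    """Summarize full-VP-to-first-vote delays given in seconds."""
    minutes = [d / 60 for d in delays_seconds]
    normal = [m for m in minutes if m < CUTOFF_MIN]
    downtimes = [m for m in minutes if m >= CUTOFF_MIN]
    return {
        "rounds": len(minutes),
        "mean_all_min": statistics.mean(minutes),
        "mean_normal_min": statistics.mean(normal) if normal else None,
        "downtimes": len(downtimes),
        "downtime_mean_min": statistics.mean(downtimes) if downtimes else None,
        "downtime_median_min": statistics.median(downtimes) if downtimes else None,
    }


# Made-up sample for illustration only; pass full_duration from the script instead.
print(delay_summary([150, 170, 140, 4800]))
```

The same function also gives the downtime count, mean, and median discussed in the downtime section.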

Update: Based on @amosbastian's comment

".... I am pretty sure Elear set the cron job to run every minute instead of every 5 minutes...."

In response, I also list the following recent voting start times:

2019-02-05 16:15:09

2019-02-04 17:55:09

2019-02-03 20:00:09

2019-02-02 22:05:12

2019-02-02 12:55:54

2019-02-02 01:15:15

2019-02-01 05:25:15

2019-01-31 08:05:12

2019-01-30 08:30:12

2019-01-29 12:05:09

2019-01-28 13:05:09

2019-01-27 13:25:09

2019-01-26 13:30:09

2019-01-25 13:50:09

2019-01-24 13:55:09

2019-01-23 14:35:09

2019-01-22 15:05:12

This pattern would be extremely unlikely if the cron job ran every minute (running it every minute might also be dangerous, since it could create duplicate instances; see details in my comment to amosbastian). I think this pattern clearly shows that the bot runs every 5 minutes (again, thanks to the powerful LLN :)

Reverse engineering

One may think this LLN analysis is too simple, but it teaches an important lesson: you can reverse engineer a system from its observable patterns. Even without knowing that the bot is run by a cron job, we could guess from the data above that it is scheduled every 5 minutes.
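The pattern check can be made explicit with a short script over the 17 start times listed above. The probability at the end rests on my own assumption that, under an every-minute schedule, the minute of each start would be unrelated to the 5-minute wall-clock grid; `offsets_from_grid` is an illustrative name.

```python
from datetime import datetime

# The 17 recent voting start times listed above.
STARTS = [
    "2019-02-05 16:15:09", "2019-02-04 17:55:09", "2019-02-03 20:00:09",
    "2019-02-02 22:05:12", "2019-02-02 12:55:54", "2019-02-02 01:15:15",
    "2019-02-01 05:25:15", "2019-01-31 08:05:12", "2019-01-30 08:30:12",
    "2019-01-29 12:05:09", "2019-01-28 13:05:09", "2019-01-27 13:25:09",
    "2019-01-26 13:30:09", "2019-01-25 13:50:09", "2019-01-24 13:55:09",
    "2019-01-23 14:35:09", "2019-01-22 15:05:12",
]


def offsets_from_grid(stamps):
    """Seconds after the latest 5-minute wall-clock boundary for each timestamp."""
    result = []
    for s in stamps:
        t = datetime.strptime(s, "%Y-%m-%d %H:%M:%S")
        result.append((t.minute % 5) * 60 + t.second)
    return result


offsets = offsets_from_grid(STARTS)
print(max(offsets))  # 54, from 2019-02-02 12:55:54; all others are 9-15 s

# Under an every-minute schedule, each start would fall in the first minute of a
# 5-minute slot with probability about 1/5, so all 17 doing so by chance is about:
print((1 / 5) ** len(STARTS))  # about 1.3e-12
```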

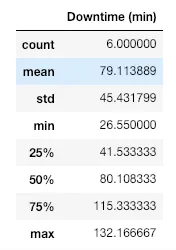

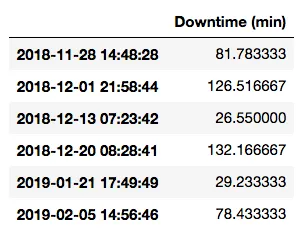

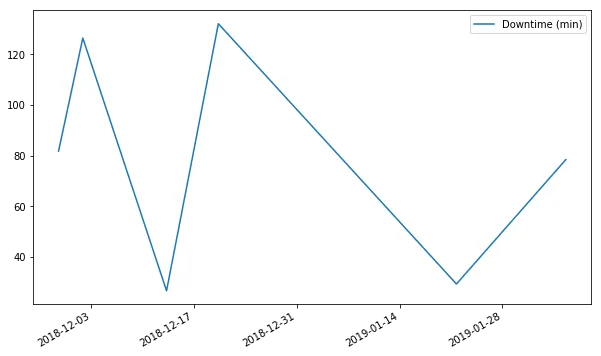

6 downtimes in 3 months might be a bit too frequent

There were 6 downtimes in total, and both the mean and the median are about 80 minutes, i.e., 1 hour and 20 minutes.

Once the bot is down, it takes a long time to recover. I guess this might be due to the lack of a monitoring system, but I don't know whether one exists.

I run two bots of my own: @gomdory, which is similar to dustsweeper but free, to encourage communication (because it's free, it only serves a limited community due to limited resources), and @ulockblock, a curation bot. Both are very stable, with virtually no downtime for much longer than 3 months (my cheap VPS server went down only once).

I haven't looked into the code in detail, so I'm not sure whether the downtime is due to the bot or the server. But I don't think it's caused by the bot code. Maybe there could be data-fetching errors? Other than that, I think it's due to external factors on the server. Still, although the sample size is too small, 6 downtimes in 3 months seems too frequent. Which hosting service is that? I should avoid it :)

Some of the downtime might be due to scheduled maintenance, but I don't know.

Update: Based on @amosbastian's comment (Thanks a lot for your comment!)

"... Also, don't think the droplet Elear is hosting it on has any problems, or that he actually did anything to fix the problem today - the delays were mostly caused by nodes not working properly, me fucking up something in the code, or someone messing up the spreadsheet we use, which in turn messes with the database and the bot. Honestly I am surprised that there were only 6 in the last 3 months, as I recall there being more lol."

As I replied, if there were more incidents than these 6, then fortunately the others must have happened while VP wasn't full yet, so they caused no visible delay.

What about removing the 2.5 min average delay?

For the usual 2.5 min delay, I understand that the current approach is simple, but the bot could instead sleep only until VP is exactly full (of course, all preparation should be done beforehand to save even more time, although this turns out to be negligible). I believe most bots that start voting at full VP work this way, and mine does too. This also requires preventing duplicate instances, but that can be done easily.
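A minimal sketch of the sleep-until-full idea, with duplicate-instance prevention via an exclusive file lock (Unix-only `fcntl.flock`). This is not the Utopian bot's code or my bot's code: `get_vp` and `vote_round` are hypothetical callables the bot would supply, the lock path is a made-up default, and VP is assumed to regenerate linearly over 5 days.

```python
import fcntl
import time

REGEN_SECONDS = 5 * 24 * 60 * 60  # linear 0% -> 100% VP in 5 days


def seconds_until_full(vp_percent):
    """Seconds until VP reaches 100%, assuming linear regeneration."""
    return max(0.0, (100 - vp_percent) * REGEN_SECONDS / 100)


def acquire_single_instance_lock(path):
    """Return an open, exclusively locked file, or None if another instance holds it."""
    f = open(path, "w")
    try:
        fcntl.flock(f, fcntl.LOCK_EX | fcntl.LOCK_NB)
    except BlockingIOError:
        f.close()
        return None
    return f


def run_when_full(get_vp, vote_round, lock_path="/tmp/voting-bot.lock", sleep=time.sleep):
    """Sleep until VP is full, then vote once; get_vp and vote_round are hypothetical."""
    lock = acquire_single_instance_lock(lock_path)
    if lock is None:
        return False  # another instance is already running
    try:
        # Preparation (fetching contributions, etc.) would go here, before waiting.
        while True:
            wait = seconds_until_full(get_vp())  # re-check VP after each sleep
            if wait <= 0:
                break
            sleep(wait)
        vote_round()
        return True
    finally:
        lock.close()  # closing the file releases the lock
```

Re-reading VP after each sleep guards against rounding and against VP changing while the bot waits.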

Conclusion

- If the voting bot could be operated more stably, we could get up to about 2 more voting rounds per year :)

- The causes of the long downtimes are worth investigating.

- Even without the downtime, there is room for improvement. I don't want to exaggerate :) The usual delay adds up to about 16 hours per year, i.e., about 0.7 more rounds :)

- To be honest, the bot itself is quite stable. Thanks a lot @amosbastian!
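The per-year figures above can be reproduced from the summary statistics with a back-of-envelope calculation. It assumes that a round lasts, on average, the 92-day window divided by the 92 full-VP events (about one day), which is my simplification.

```python
WINDOW_DAYS = 92         # 2018-11-05 to 2019-02-05
FULL_VP_EVENTS = 92      # full-VP rounds observed in that window
MEAN_DELAY_MIN = 7.59    # all delays, including downtimes
NORMAL_DELAY_MIN = 2.60  # delays under 5 minutes only

round_hours = WINDOW_DAYS * 24 / FULL_VP_EVENTS  # average round length (~24 h)
rounds_per_year = 365 * 24 / round_hours


def lost_per_year(mean_delay_min):
    """Hours lost per year to delays, and the equivalent number of voting rounds."""
    lost_hours = rounds_per_year * mean_delay_min / 60
    return lost_hours, lost_hours / round_hours


for label, d in [("all delays", MEAN_DELAY_MIN), ("normal delay only", NORMAL_DELAY_MIN)]:
    hours, rounds = lost_per_year(d)
    print(f"{label}: {hours:.1f} h/year, {rounds:.2f} rounds/year")
```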

Tools and Scripts

- I used @steemsql to get the voting data, but it could also be done with other tools (e.g., a Python library using the account_history API), since this isn't a large data set.

- Here is the Python script that I made for the analysis.

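```python
import pypyodbc
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt  # used for the plots (plotting code not shown)

# Steem regenerates voting mana linearly from 0% to 100% over 5 days.
STEEM_VOTING_MANA_REGENERATION_SECONDS = 5 * 24 * 60 * 60

# SteemSQL connection string (placeholder; fill in your own credentials).
CONNECTION_STRING = '...'
connection = pypyodbc.connect(CONNECTION_STRING)

SQLCommand = '''
SELECT timestamp, weight
FROM TxVotes
WHERE voter = 'utopian-io' AND timestamp > '2018-11-05 10:0'
ORDER BY timestamp ASC
'''

# parse_dates turns the index into datetimes so time differences work below.
df = pd.read_sql(SQLCommand, connection, index_col='timestamp', parse_dates=['timestamp'])

num_data = len(df)
ts = df.index.values          # numpy datetime64 timestamp of each vote
weight = df['weight'].values  # vote weight in basis points (10000 = 100%)

vp_before = [0.0] * num_data  # VP (%) just before each vote
vp_after = [0.0] * num_data   # VP (%) just after each vote
full_start = []     # times at which VP reached 100%
full_duration = []  # seconds from reaching 100% VP to the next vote (the delay)

for i in range(num_data):
    if i == 0:
        # The bot only starts voting at full VP, so the first vote is at 100%.
        vp_before[i] = 100
    else:
        # Linear regeneration since the previous vote.
        elapsed = (ts[i] - ts[i - 1]) / np.timedelta64(1, 's')
        vp_raw = vp_after[i - 1] + elapsed * 100 / STEEM_VOTING_MANA_REGENERATION_SECONDS
        if vp_raw > 100:
            # VP was capped at 100%: record when it became full and how long the bot waited.
            vp_before[i] = 100
            seconds_to_full = int((100 - vp_after[i - 1]) * STEEM_VOTING_MANA_REGENERATION_SECONDS / 100)
            dt_full = ts[i - 1] + np.timedelta64(seconds_to_full, 's')
            full_start.append(dt_full)
            full_duration.append((ts[i] - dt_full) / np.timedelta64(1, 's'))
            # print('%s: %.2f min' % (full_start[-1], full_duration[-1] / 60))
        else:
            vp_before[i] = vp_raw
    # Model used here: a 100% vote consumes 2% of the current VP.
    vp_after[i] = vp_before[i] * (1 - weight[i] / 10000 * 0.02)
    # print('%.2f -> %.2f -> %.2f' % (vp_before[i], weight[i] / 100, vp_after[i]))
```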

Relevant Links and Resources

- Developing the new Utopian bot by @amosbastian

- Utopian bot sorting criteria improvement to prevent no voting by @blockchainstudio

- Busy - 3 new features and 2 bug fixes - powerdown information, zero payout, 3-digit precision, etc. (my dev post that went without a vote)

- eSteem Surfer - powerdown information with the correct effective SP, search to eSteem toggle, etc. (another dev post of mine, also in danger)