I am reviewing a Gigabyte GTX 1060 6GB with Samsung memory. Most people prefer Samsung memory only because it is faster than Hynix memory when mining Ethereum. While that might be true, on just about every other mining algorithm the two perform about the same. A side-by-side Hynix/Samsung comparison is in another post, found here:

https://steemit.com/nvidia/@mhunley/nvidia-1060-3gb-samsung-vs-hynix-memory-mining-comparison

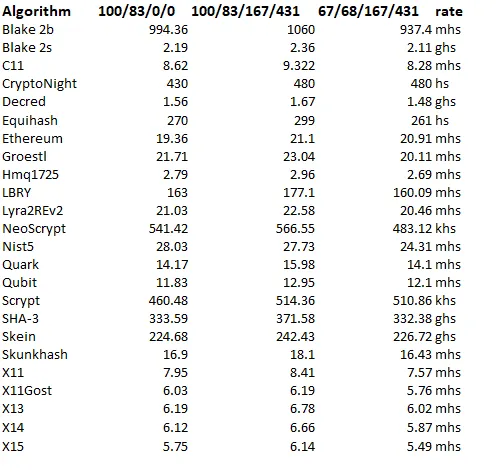

I ran a benchmark on the card using stock out-of-the-box settings, overclocked settings, and what I like to call tuned settings. The stock settings consist of a power limit at 100%, temperature limit at 83C, core clock at +0, and memory clock at +0. The overclocked settings consist of a power limit at 100%, temperature limit at 83C, core clock at +167MHz, and memory clock at +431MHz. These are overclock values that I found to run all day long without freezing or locking up.

While the stock settings get great hash rates straight out of the box, you can mine more efficiently by lowering the power and temperature limits using MSI's Afterburner overclocking utility. I found that by setting the power limit to 65%, the temperature limit to 67C, the core clock to +167MHz, and the memory clock to +431MHz, the card would run cool and still get very good hash rates. I have been running a rig of six cards on these settings for over 4 months now and the cards are barely warm.
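To keep the three profiles straight, here is a minimal Python sketch that just writes them down as data and prints each one in power limit/temperature limit/core offset/memory offset order. The `Profile` class and its field names are my own illustration, not anything from Afterburner; the script does not apply settings to a card, it only formats the values listed above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    """One set of Afterburner-style settings (hypothetical helper class)."""
    power_limit_pct: int   # power limit, percent
    temp_limit_c: int      # temperature limit, degrees C
    core_offset_mhz: int   # core clock offset, MHz
    mem_offset_mhz: int    # memory clock offset, MHz

    def label(self) -> str:
        # Format: power limit%/temperature limit/core offset/memory offset
        return (f"{self.power_limit_pct}%/{self.temp_limit_c}C/"
                f"{self.core_offset_mhz:+d}/{self.mem_offset_mhz:+d}")


# Values taken directly from the settings described in this post.
PROFILES = {
    "stock": Profile(100, 83, 0, 0),
    "overclocked": Profile(100, 83, 167, 431),
    "tuned": Profile(65, 67, 167, 431),
}

for name, profile in PROFILES.items():
    print(f"{name:12s} {profile.label()}")
```

Written this way, it is easy to see that the tuned profile keeps the same clock offsets as the overclocked one and only lowers the power and temperature limits.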

The column headings below use the following format:

Power limit %/temperature limit/core overclock/memory overclock