In this post we will look at a fancy kind of AI called reservoir computing. We will stick to the general concept here. In a future post I will show you an implementation of this fancy neural net.

By now we have probably all played around with AIs like ChatGPT and DALL-E. But how do you actually create an AI? The majority of AIs are based on neural nets, and training these neural nets to generate text or images is a computationally intensive task. A neural net is like a very general model with many possible configurations, and the vast majority of these configurations are nonsensical. So the game is to somehow find a configuration which performs well on the task you want it to do. That is not to say that choosing the neural net itself is an easy problem. Indeed, there are two problems: selecting or building a neural net, and then training it to perform well on the task.

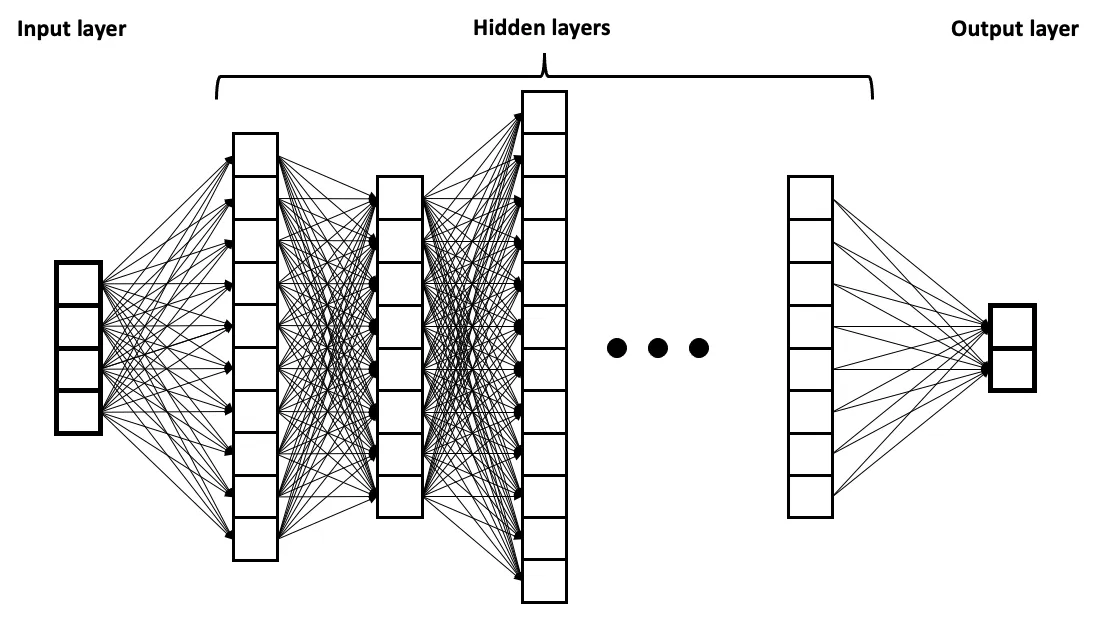

The majority of neural nets have some kind of nested structure, where the input information gets transformed many times before it ends up at an output. Here the input could be, for example, the question you ask ChatGPT, and the output the text it generates. Each transformation has parameters which need to be suitably chosen to get a good model for the task. Because of this nested structure, finding good parameters is a heavy computational task.
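To make the nested structure a bit more concrete, here is a minimal sketch in plain Python. The layer sizes, the `tanh` nonlinearity and the helper names (`layer`, `random_layer`, `forward`) are my own illustrative choices, not taken from any particular model. The parameters are just random numbers here, so this is one of the many nonsensical configurations.

```python
import math
import random

def layer(inputs, weights, biases):
    """One transformation: weighted sums followed by a tanh nonlinearity."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def random_layer(n_in, n_out, rng):
    """Draw random (untrained) parameters for one layer."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-1, 1) for _ in range(n_out)]
    return weights, biases

def forward(x, layers):
    """Apply the layers one after another: the output of one is the input of the next."""
    for weights, biases in layers:
        x = layer(x, weights, biases)
    return x

rng = random.Random(0)
sizes = [3, 4, 4, 2]  # 3 inputs, two hidden layers of 4 nodes, 2 outputs (arbitrary choice)
layers = [random_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]

# Every weight and bias in every layer is a parameter that training would have to tune.
n_params = sum(len(w) * len(w[0]) + len(b) for w, b in layers)

output = forward([0.1, 0.2, 0.3], layers)
print("number of parameters:", n_params)
print("output:", output)
```

Even this toy has a few dozen parameters spread over three nested transformations, and changing a parameter in the first layer affects everything that comes after it. That coupling is what makes finding good parameters expensive.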

The trainable components are sequentially stacked in connected layers

CC Attribution-ShareAlike 4.0

Simple image classification, like telling cats apart from dogs, you can do on a home computer, but for real-world tasks you need something much bigger. Nowadays we are at a stage where the most complicated models can only be trained and deployed by big institutions or companies, as even universities often do not have the resources to get the necessary equipment.

Hence, effort has been made to develop methods which can deploy networks with fewer computational resources. The first thing that comes to mind is the unnatural way we try to find the optimized model. A general-purpose computer is not built for the specific computations we need to perform: the operations are not hard-wired into the hardware. Consequently, it seems fruitful to develop hardware that does exactly the computations needed to find the optimal parameters.

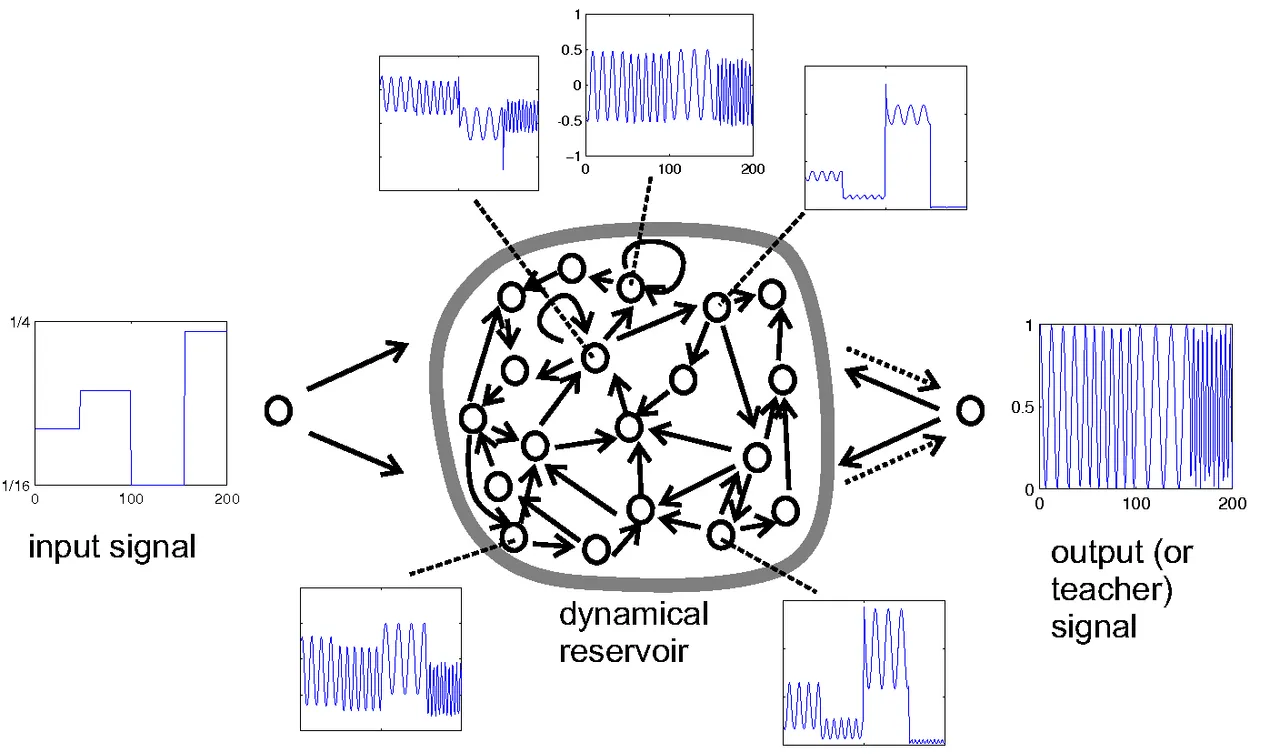

A completely out-of-the-box approach to this problem is reservoir computing. Remember how neural nets are generally a kind of nested transformation. One reason for this nesting is that it introduces nonlinearity into the system, and this nonlinearity is needed because difficult tasks are generally not linear. So we could make the assumption that the nonlinearity is much more important than the trainable parameters within these nested transformations. This leads us to the general framework for reservoir computing: the input information flows into a reservoir, a nonlinear process without trainable components, which in turn feeds into a few trainable parameters that give the output.
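The framework above can be sketched in a few lines of plain Python. This is only a toy under my own assumptions, not the implementation from the future post: the reservoir is a small random recurrent network with `tanh` nodes, the task (predict the square of the previous input) is made up for illustration, and the readout is fitted with simple gradient descent. All names (`make_reservoir`, `run_reservoir`, `train_readout`) are hypothetical.

```python
import math
import random

def make_reservoir(n_nodes, seed=0):
    """Draw fixed random input and recurrent weights. These are never trained."""
    rng = random.Random(seed)
    w_in = [rng.uniform(-1, 1) for _ in range(n_nodes)]
    # Scaling by sqrt(n_nodes) keeps the recurrent feedback weak (an assumed heuristic).
    w_res = [[rng.uniform(-1, 1) / math.sqrt(n_nodes) for _ in range(n_nodes)]
             for _ in range(n_nodes)]
    return w_in, w_res

def run_reservoir(inputs, w_in, w_res):
    """Feed a sequence of numbers through the fixed nonlinear reservoir."""
    n = len(w_in)
    state = [0.0] * n
    states = []
    for u in inputs:
        state = [
            math.tanh(w_in[i] * u + sum(w_res[i][j] * state[j] for j in range(n)))
            for i in range(n)
        ]
        states.append(state + [1.0])  # the constant 1.0 acts as a bias feature
    return states

def mse(states, targets, w_out):
    """Mean squared error of the linear readout."""
    preds = [sum(w * s for w, s in zip(w_out, row)) for row in states]
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)

def train_readout(states, targets, steps=200):
    """Fit only the linear readout weights with full-batch gradient descent."""
    n_feat = len(states[0])
    w_out = [0.0] * n_feat
    lr = 0.5 / n_feat  # small, conservative step size
    for _ in range(steps):
        grad = [0.0] * n_feat
        for row, t in zip(states, targets):
            err = sum(w * s for w, s in zip(w_out, row)) - t
            for k in range(n_feat):
                grad[k] += 2 * err * row[k] / len(targets)
        w_out = [w - lr * g for w, g in zip(w_out, grad)]
    return w_out

rng = random.Random(1)
inputs = [rng.uniform(-1, 1) for _ in range(201)]
w_in, w_res = make_reservoir(20)
states = run_reservoir(inputs, w_in, w_res)[1:]  # drop step 0, it has no previous input
targets = [u ** 2 for u in inputs[:-1]]          # target at step t: squared input from step t-1

w_out = train_readout(states, targets)
print("error before training:", mse(states, targets, [0.0] * len(w_out)))
print("error after training: ", mse(states, targets, w_out))
```

Note that `w_in` and `w_res` stay exactly as they were drawn. Only the 21 numbers in `w_out` are trained, and because the readout is linear, fitting it is a much easier problem than training a nested network.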

The interesting thing about the reservoir is that real physical systems can be reservoirs, as long as they are in a sense nonlinear enough. For example, a bucket of water can under suitable conditions be a reservoir, as the underlying motion is nonlinear enough. There are of course many caveats here. Not every system is nonlinear enough, and I think it is unlikely that this framework will work for very complicated tasks like the ones I was talking about in the beginning. It is generally a good framework when the task is simple enough that implementing it on a computer is overkill.

The reservoir looks like a chaotic mess in comparison to the previous neural net

By Herbert Jaeger CC BY-SA 3.0

That's all for now. In a future post we will look at an implementation and uncover the underlying dynamics of the reservoir.

Reference: Nakajima, Kohei. "Physical reservoir computing—an introductory perspective." Japanese Journal of Applied Physics 59.6 (2020): 060501.

My cat didn't like snow enough to take a photo tax