You have probably used ChatGPT many times by now, but do you know what it is and how it works?

The other day I talked about how AI is just a tool and how it can sometimes be quite foolish. In that post, I touched on what ChatGPT is behind the scenes: a highly trained autocorrect or, more specifically, a text prediction engine.

Would you like to know how it does it though, from a very simplified point of view?

At its core, ChatGPT is a neural network, a system loosely modeled on how the human brain works. A neural network consists of multiple types of layers. In its simplest form, it has an input layer, one or more hidden layers, and an output layer. In reality, there is a lot more to a neural network than these three layers, but let's keep it really simple.

The input layer is what accepts your request. It takes your request and breaks it down into individual tokens. As I explained in my last post, a token could be a word, a part of a word, or even multiple words like "New York". A token is basically the smallest meaningful chunk that text gets broken into. The number of tokens is usually slightly higher than the number of words in the input. The rule of thumb is that 750 words end up being roughly 1,000 tokens.
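To make the rule of thumb concrete, here is a minimal sketch that estimates a token count from a word count. It only applies the 750-words-per-1,000-tokens ratio to whitespace-separated words. It is not a real tokenizer, and the function name `estimate_tokens` is made up for this example.

```python
# Rule of thumb from the text: 750 words is roughly 1,000 tokens.
WORDS_PER_TOKEN = 750 / 1000  # 0.75 words per token (an approximation)


def estimate_tokens(text: str) -> int:
    """Estimate the token count of `text` from its whitespace-separated word count."""
    word_count = len(text.split())
    return round(word_count / WORDS_PER_TOKEN)


prompt = "The quick brown fox jumps over the lazy dog"
print(len(prompt.split()), "words ->", estimate_tokens(prompt), "estimated tokens")
# prints: 9 words -> 12 estimated tokens
```

A real tokenizer will give different counts depending on the text, so treat this as a ballpark figure only.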

The input layer then feeds into numerous hidden layers. These hidden layers make choices based on formulas, but in its simplest form, each choice comes down to a percentage likelihood of it being this or that. Each input token is sent through the input layer and then through all the hidden layers. Previous tokens are sent along with the current token so that the next layer can make a decision with context. Finally, the result is sent to the output layer, which represents the final prediction.

This is a gross simplification of the process, and there are some other steps, like mapping the tokens to embeddings. Let me show you an example of what we talked about, though.
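The flow from input to hidden layer to output can be sketched in plain Python. Everything here is invented for illustration: the four-word vocabulary, the layer sizes, the random weights, and the three numbers standing in for the encoded input. A real model uses learned weights and far more machinery, so read this as a toy that only shows how numbers pass through layers and come out as percentages.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs, weights, biases):
    """One layer: each output neuron is a weighted sum of every input plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Simple activation function: negative values become zero."""
    return [max(0.0, v) for v in values]


def random_layer(rng, n_in, n_out):
    """Untrained layer with random weights (a trained model would load saved ones)."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


VOCAB = ["cat", "dog", "sat", "mat"]  # hypothetical 4-token vocabulary

rng = random.Random(0)
hidden_w, hidden_b = random_layer(rng, n_in=3, n_out=5)
output_w, output_b = random_layer(rng, n_in=5, n_out=len(VOCAB))

context = [0.2, -0.4, 0.9]  # made-up numbers standing in for the encoded input tokens
hidden = relu(dense(context, hidden_w, hidden_b))
probabilities = softmax(dense(hidden, output_w, output_b))

for token, p in zip(VOCAB, probabilities):
    print(f"{token}: {p:.1%}")
print("predicted next token:", VOCAB[probabilities.index(max(probabilities))])
```

Because the weights are random, the prediction is meaningless. Training is what turns those random numbers into useful ones.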

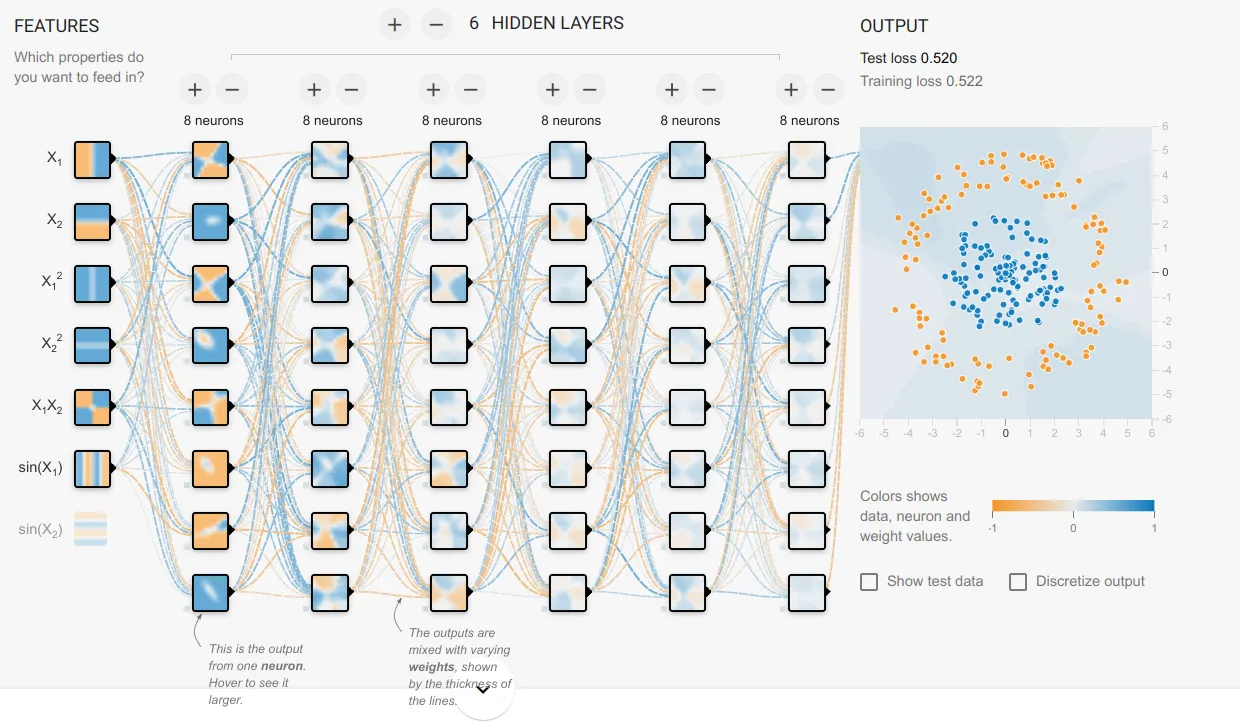

This is a very simple neural network with 6 inputs in the input layer, 6 hidden layers, and an output layer which isn't directly shown. As you can see, the input layer is fed data, which then goes through all the neurons in the hidden layers to come up with a final decision at the output. If you look between the neurons, you will see colored lines. These are called weights. Each weight is a number that represents how strongly one neuron's output influences the next neuron. During training, these weights are adjusted repeatedly until the output meets acceptable results. These weights are then saved and become "the model".
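Here is a minimal sketch of what "adjusting the weights" means, using a single weight instead of a whole network. The task (learn that the answer is always 2 times the input), the learning rate, and the number of passes are all assumptions picked for the example. Real training applies the same idea to billions of weights at once.

```python
# Training data for a made-up task: the "right answer" is always 2 * x.
examples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

weight = 0.0          # start with an uninformed weight
learning_rate = 0.05  # how big each adjustment is

for epoch in range(200):
    for x, target in examples:
        prediction = weight * x
        error = prediction - target
        # Gradient of the squared error (error ** 2) with respect to the weight.
        gradient = 2 * error * x
        # Nudge the weight in the direction that shrinks the error.
        weight -= learning_rate * gradient

print(f"learned weight: {weight:.4f}")
```

After enough passes the weight settles very close to 2, which is the value that makes every prediction match its target. That final number is what would get saved as "the model".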

Check out this simple neural network example on the TensorFlow Playground.

In this example, you can customize the learning rate, the number of layers, and the activation function. Hit the play button so you can see how it works. Keep in mind, this is an extremely simple example of a neural network. A model such as ChatGPT has many more layers to it.

I highly recommend you check out this LLM visualizer. Click on GPT-3 and zoom around to get a feel for how massive this is. Keep in mind, GPT-3 is tiny compared to GPT-4.

After a model is trained, the weights are used for what is called inference. This is the process of using a model to solve a problem and output a result.

With GPT-3, you are looking at nearly 100 layers with a width of almost 13,000 per layer. Newer versions are even larger. For ChatGPT and other large language models to get better, they need to be trained on more data, use better techniques, and have their weights fine-tuned better. This process is very expensive and time-consuming. The original GPT-3 cost around $3,200,000 to train, with GPT-4 costing upwards of $100,000,000. With GPT-5 around the corner, the cost to train may exceed $1,000,000,000.
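Those two numbers are enough for a back-of-the-envelope estimate of how many weights the model holds. The sketch below uses the published GPT-3 figures of 96 layers and 12,288 values per layer, plus a common approximation of about 12 × width² weights per layer. That approximation is my assumption, not something from this post, and it ignores smaller pieces such as the embeddings.

```python
N_LAYERS = 96   # "nearly 100 layers"
WIDTH = 12_288  # "almost 13,000" values per layer


def approx_parameters(n_layers: int, width: int) -> int:
    """Rough transformer weight count: about 12 * width^2 weights per layer."""
    return 12 * n_layers * width ** 2


total = approx_parameters(N_LAYERS, WIDTH)
print(f"{total:,} weights (about {total / 1e9:.0f} billion)")
# prints: 173,946,175,488 weights (about 174 billion)
```

That lands close to the 175 billion parameters reported for GPT-3, which gives a sense of why training costs climb so quickly as the layers get wider and more numerous.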

Fun fact: GPT-3's training data started as 45 terabytes of compressed text, which was filtered down to around 570 GB. GPT-4 was reportedly trained on roughly 13 trillion tokens, or close to 10 trillion words.