The question from the title has come up many times recently. If you know Betteridge's law of headlines, you'd expect the answer to be "no".

Well, not this time :o)

At least the answer is not that simple and it will greatly depend on what you do and - more importantly - when.

Before we start: if you are interested in the topic of upcoming RC changes, you've probably seen this post by @howo. I find the units used on hiveblocks with M misleading, since they are recalculated from raw values (it would be closer to reality if M was replaced with HP, because that's what it really is). I'm going to use raw values in graphs and examples below (where M/G will mean million/billion in raw units).

It is not 77 of 88 million RC by the way, but 77920 of 88390 HP equivalent in RC - the comma is a thousands separator.

If I had known HF26 would be postponed so much, I'd have planned the changes in RC differently and maybe thought about some better way of addressing the same problem RC was designed to solve. As it is now, the changes are rather conservative.

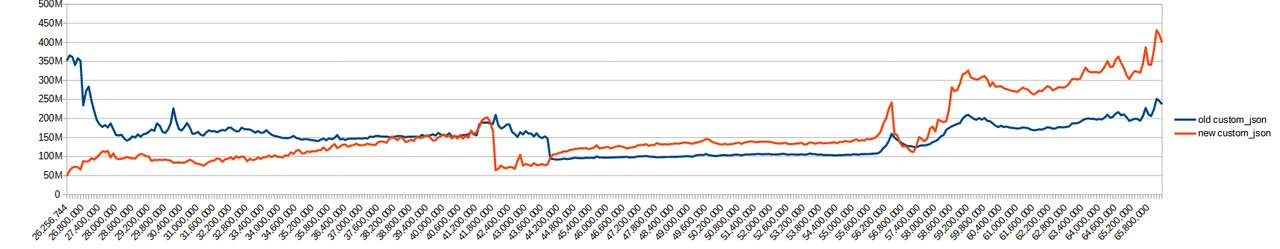

Change in the number of daily transactions over time. We were at block 59M when I started the RC analysis; the temporary increase turned out to be a long-lasting phenomenon.

The only change that could be considered revolutionary is the direct RC delegation mechanism implemented by Howo and described in the article linked above. It might turn out to be revolutionary because it opens a lot more opportunities to utilize RC that is now idle. For example, it gives wealthy accounts an alternative to burning their RC for new account tokens, and that is big. New account tokens are exceptionally expensive. Over 98% of all RC used in the past was used to finance those tokens. A single token is the equivalent of 4600 average comments or 39500 average custom_jsons.

So far the only way for small accounts to get some RC from accounts that have too much of it was to get vesting delegations. That mechanism can be abused far more easily than the new RC delegations, so it requires greater scrutiny from delegators. It also requires them to give up a portion of their power, which means vesting delegations are not really cheap. But the same people won't care about RC nearly as much, since they can't use it all anyway. We can expect vesting delegations to start being replaced by more and bigger RC delegations. That might turn out to be a bad thing though. RC delegations will be cheaper, which increases profits from operating bots. I'll get to the potential dangers of the mechanism later.

Resources

Quick reminder. Each transaction consumes some resources from up to 5 different pools. These are:

- history bytes - consumed by every operation and transaction itself; represents network bandwidth, block log storage, AH storage and now also HAF storage

- new account tokens - a luxury item among resources, very expensive but only used by one operation

- market bytes - consumed by market operations; it represents extra cost related to exchanges having to collect those operations on their local AH nodes

- state bytes - consumed by some operations (and a tiny bit by transaction) that need to store new data

- execution time - consumed by all operations and transaction itself

Price of each resource depends on many factors:

- global regeneration rate

- fill level of resource pool

- cost curve parameters (constant)

- (new) resource popularity share

Resource pools are governed by two sets of parameters. The second set (the cost curve parameters mentioned above) defines cost. The first set translates to a sort of "allowance" for each block. Block-budget is an important derived unit that allows comparison not only between the old and new versions of RC params, but also between different pools. Processing of each block is expected to consume a certain amount of resources. If more than that is used, the missing portion is taken from the pools; if not all of it is used, the excess is added to the pools. But to prevent accumulation of resources over time, the pools also have a "half-life": a set percentage of each pool decays with every block. If no resource is used for a long time, the pool fills up until the fixed block-budget and the percentage-based decay become equal, putting the pool in a state of equilibrium. When that happens we have a full pool. Each pace of consumption equalizes at a different level. The lower the level, the more that resource costs; how much more is defined by the cost curve parameters.
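The budget-and-decay dynamics described above can be illustrated with a toy simulation. This is only a sketch: the function names, the order of steps within a block and all the numbers are assumptions made for illustration, not the actual hived implementation.

```python
def step_pool(level, budget, decay, used):
    """Advance the pool by one block.

    level  - amount of resource currently in the pool
    budget - fixed block-budget added every block (made-up value)
    decay  - fraction of the pool that decays every block (made-up value)
    used   - resource consumed by transactions in this block
    """
    level = level - level * decay + budget - used
    return max(level, 0.0)


def equilibrium_level(budget, decay, used_per_block=0.0):
    """Level at which per-block decay equals the unused part of the budget."""
    return max(budget - used_per_block, 0.0) / decay


def simulate(blocks, budget, decay, used_per_block, start=0.0):
    """Run the pool for a number of blocks with constant usage."""
    level = start
    for _ in range(blocks):
        level = step_pool(level, budget, decay, used_per_block)
    return level


if __name__ == "__main__":
    BUDGET, DECAY = 100.0, 0.001  # hypothetical parameters
    full = equilibrium_level(BUDGET, DECAY)
    for used in (0.0, 50.0, 90.0):
        level = simulate(20_000, BUDGET, DECAY, used)
        print(f"use {used:5.1f}/block -> pool settles at {level / full:.1%} of full")
```

With no usage the pool converges to the "full" level where decay equals the block-budget; each constant pace of consumption settles at its own lower level.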

What changed?

It was obvious that after HF24 optimizations the values of resources consumed for state bytes and execution time were not reflecting reality. On top of that some operations were not reporting proper consumption even for old standards. A lot of significant work was not accounted for in execution time consumption. Those two resource pools were main subject of interest.

When I started analyzing what needs to be updated in RC, I hoped there would be a set of objective rules that would tell how to make adjustments.

There are none.

So I tried to make it so that at least amounts of consumed resources would be easy to understand.

State bytes.

Values of state byte consumption are now expressed in hour-bytes. One byte of RAM held for one hour is the basic unit. The state objects use RAM not just with the object itself but also with index nodes - this is now reflected. A lot of objects have well defined lifetime. F.e. comment_cashout_object is created on comment_operation and lives for exactly 7 days.

When there are many potential lifetimes, the longest is taken into account. F.e. account_recovery_request_object is created when agent initiates account recovery. It lives at most HIVE_ACCOUNT_RECOVERY_REQUEST_EXPIRATION_PERIOD (one day) which is used to calculate memory consumption, but might be removed earlier with recover_account_operation.

Some objects, once created, live forever, f.e. account_object. If "forever" was applied directly, consumption of hour-bytes would always be infinite. Therefore I've added an assumption that the average memory required to run a node will double after 5 years, so all the memory consumed can be considered "covered" after 5 years. Therefore "forever" means 5 years of consumption. It is a totally arbitrary value.

Finally, some objects have no definite lifetime (so potentially forever), but can be removed with an explicit operation, f.e. vesting_delegation_object created when VESTs are delegated with delegate_vesting_shares_operation. Such objects are assumed to last for "half forever", that is 2.5 years. Again, a totally arbitrary value.
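The lifetime rules above can be summarized in a small sketch. The object names and lifetimes come from the text; the 100-byte object size, the 365-day year and the helper names are assumptions for illustration only (real sizes differ per object and include index nodes).

```python
HOURS_PER_DAY = 24
HOURS_PER_YEAR = 365 * HOURS_PER_DAY  # assumption: leap days ignored

FOREVER_HOURS = 5 * HOURS_PER_YEAR        # "forever" = 5 years
HALF_FOREVER_HOURS = FOREVER_HOURS // 2   # "half forever" = 2.5 years

# Lifetime used for each example object mentioned in the text.
LIFETIME_HOURS = {
    "comment_cashout_object": 7 * HOURS_PER_DAY,           # exactly 7 days
    "account_recovery_request_object": 1 * HOURS_PER_DAY,  # longest possible lifetime
    "account_object": FOREVER_HOURS,                       # never removed
    "vesting_delegation_object": HALF_FOREVER_HOURS,       # removable by explicit operation
}


def hour_bytes(object_name, size_bytes):
    """State consumption in hour-bytes: bytes held times hours they are held."""
    return size_bytes * LIFETIME_HOURS[object_name]


if __name__ == "__main__":
    SIZE = 100  # hypothetical object size in bytes, index nodes included
    for name in LIFETIME_HOURS:
        print(f"{name}: {hour_bytes(name, SIZE):,} hour-bytes")
```

For the same size, a permanent object consumes about 260 times more hour-bytes than one that lives for 7 days, which is why the gap between temporary and permanent state grew so much.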

The result of the above change is that operations that create temporary objects consume far less of the state byte resource than operations that create permanent or long-lasting objects. Previously the difference was far smaller. The difference is reflected in the final cost. The RC cost of operations such as account_create_operation greatly increased in comparison to the previous version (consumption increased over 6 times). On the other hand, the cost associated with state byte consumption of operations that only create temporary objects, like vote_operation, decreased considerably (consumption decreased over 3 times). Since transaction_object (used to track "known" transactions) no longer holds the whole transaction body, the state byte consumption of custom_json_operation and its associated cost became negligible.

One more thing. When the new consumption was calculated with the old method (just at the end of the transaction), some operations that didn't even report any state consumption before, like account_update_operation, suddenly started to report significant consumption. That particular operation looked too important to become exceptionally costly (even free accounts need it). Therefore a new mechanism had to be introduced: differential use calculation. It is best described with an example. When account_update_operation just replaces an old key with a new one, no new state is allocated, therefore state consumption is none. However, if the operation is used to extend an existing authority, then all the new entries are counted, and they cost a ton. The mechanism has to check state not only after the operation was executed, but also before. Only a handful of operations are covered by that mechanism, just those where the overcharging that would result from its absence would be significant (after all, use of the mechanism comes with increased execution cost).
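A minimal sketch of the differential idea, assuming an authority can be modelled as a mapping of keys to weights and that every entry takes a fixed number of bytes. Both the model and the 64-byte figure are hypothetical; the real code measures actual state objects.

```python
BYTES_PER_ENTRY = 64  # hypothetical footprint of one authority entry


def authority_size(authority):
    """Rough state footprint of an authority: one fixed-size slot per entry."""
    return len(authority) * BYTES_PER_ENTRY


def differential_state_use(before, after):
    """Charge only for growth between the state before and after the operation."""
    growth = authority_size(after) - authority_size(before)
    return max(growth, 0)


if __name__ == "__main__":
    old = {"KEY_A": 1}
    replaced = {"KEY_B": 1}                           # old key swapped for a new one
    extended = {"KEY_A": 1, "KEY_B": 1, "KEY_C": 1}   # two entries added
    print("replace key:", differential_state_use(old, replaced))
    print("extend authority:", differential_state_use(old, extended))
```

Measuring before and after is what makes a plain key replacement free in terms of state, at the price of one extra measurement per operation.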

Comparison of fill level between old and new state byte pool. The "bump" that starts after block 56M is a lot of new accounts being created. Once the action stopped, the new version of the state pool recovered, because normal operations consume less state than previously.

Execution time.

Since quite some time has passed since the execution time consumption of operations was measured, computers used for running nodes have become faster. So the minimum change had to be an update of the values. However, the old execution resource pool was designed to cover the time of replay, so plenty of long-running processes that run during live sync were not accounted for. The most prominent is the time spent on calculating public keys from transaction signatures. The pool parameters were changed to focus on time spent in live sync (after all, that is what determines whether nodes can keep up with timely processing of blocks). Since more processes are covered by the pool, the budget was increased. Its half-life was reduced to 1 hour (from 15 days), so it reacts to changes in traffic much faster (it depletes faster but also refills faster).
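To get a feel for what the half-life change means per block, here is a rough sketch. It assumes the 3-second Hive block interval and a simple exponential decay; the real pool stores its decay parameters differently, so treat the helper names and the formula as an illustration only.

```python
BLOCK_INTERVAL_SECONDS = 3  # Hive block interval


def blocks_in(seconds):
    """Number of blocks produced in the given time span."""
    return seconds // BLOCK_INTERVAL_SECONDS


def decay_per_block(half_life_blocks):
    """Fraction of the pool lost each block for a given half-life."""
    return 1.0 - 0.5 ** (1.0 / half_life_blocks)


def remaining_after(blocks, half_life_blocks):
    """Fraction of an untouched surplus still present after some blocks."""
    return (1.0 - decay_per_block(half_life_blocks)) ** blocks


if __name__ == "__main__":
    one_hour = blocks_in(60 * 60)
    fifteen_days = blocks_in(15 * 24 * 60 * 60)
    for label, half_life in (("new, 1 hour", one_hour), ("old, 15 days", fifteen_days)):
        left = remaining_after(one_hour, half_life)
        print(f"{label}: {half_life} blocks half-life, {left:.4f} left after one hour")
```

With the old half-life almost nothing changes within an hour, while the new pool forgets half of any deviation in the same time, which is why it follows traffic so closely.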

Calculating time spent on signatures flattened the differences between operations. There are just three operations where execution time of operation is higher than calculation of single signature - for most cases (and over 99% of transactions consist of single operation) execution time associated with signatures became dominant portion of what transaction uses out of related resource pool. In case of custom_json_operation, despite JSON parser optimization (that pretty much removed all execution time spent on the operation itself), the use of execution time resource almost doubled compared to old version where signatures were not accounted for.

Comparison of fill level between old and new execution time pool. The new one is more "jagged" because it reacts more quickly to changes in use; also, more of it is now used by transaction signatures, which shows after block 56M.

Other pools.

I have not changed the other pools, except for one new mechanism.

At the beginning there was an idea to introduce a new pool that would cover Hivemind db storage separately from history bytes. In the end it was shelved, but it exposed a problem: when you wanted to add a new RC pool, you had to adjust the parameters of all other pools. That is because previously each pool treated the global RC regeneration rate (sort of "new money" in the system) as if all that RC was to be spent only on that resource. But how to split global regen between different pools? It could be split evenly, however that would not be natural. When denim becomes the new fashion trend, you can expect more money to flow to denim suppliers, while wool suppliers might need to lower their prices. The same principle was applied to the RC regeneration rate: it is split between pools proportionally to resource popularity in the last period (which in our case means the last day, yet another arbitrary value).

The mechanism makes sure popular resources can't be hogged by users of a single type of operation. For example, custom_json_operation characteristically uses mostly history bytes and then a bit of execution time (for signatures). There are times when there are so many such operations that they can't even fit in blocks. In the absence of the mechanism in question, eating a lot of history bytes would mean all other operations automatically rise in price. So comment_operation, which is usually a lot bigger than a custom json, would not only have a hard time getting into a block due to its size, but could also be priced out. The new mechanism helps here. When history bytes become more popular, that pool's popularity share increases, but it also means the shares of other pools decrease. So comments can actually have their cost lowered during such times, because they also consume a significant amount of state, which will drop in price as a result.

Introduction of the above mechanism cut the baseline price to a quarter, because the neutral share is 25% of global regen for each pool (there are 5 pools, however the pool of new account tokens is controlled by witnesses, so it was excluded from the changes). The price was subsequently multiplied by 3 to compensate. Why 3 and not 4? Because I was trying to keep overall RC consumption roughly the same as it was before, and a value of 3 gave results closest to that goal.
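The split of global regen by popularity, together with the compensating multiplier, can be sketched as follows. The function names, the common usage unit and the fallback for zero usage are assumptions; where exactly the multiplier of 3 is applied in the real code is not stated here, so it is simply folded into the per-pool regen.

```python
SHARED_POOLS = ("history", "market", "state", "exec")  # new account tokens excluded
COMPENSATION = 3  # multiplier chosen to keep overall RC consumption similar


def popularity_shares(recent_usage):
    """Share of each pool in the usage of the last period (the last day).

    recent_usage maps pool name to usage in some common unit (assumption).
    """
    total = sum(recent_usage[pool] for pool in SHARED_POOLS)
    if total == 0:
        # Assumption: with no usage at all, fall back to the neutral 25% each.
        return {pool: 1.0 / len(SHARED_POOLS) for pool in SHARED_POOLS}
    return {pool: recent_usage[pool] / total for pool in SHARED_POOLS}


def regen_for_pool(global_regen, share):
    """Portion of global regen a pool uses as its price baseline."""
    return global_regen * share * COMPENSATION


if __name__ == "__main__":
    usage = {"history": 58.98, "market": 1.69, "state": 15.01, "exec": 24.32}
    shares = popularity_shares(usage)
    for pool, share in shares.items():
        print(f"{pool}: share {share:.2%}, regen {regen_for_pool(1863, share):.1f}G")
```

At the neutral share of 25% a pool ends up with 0.25 * 3 = 75% of the old baseline; a pool that becomes popular gets more, and the others get correspondingly less.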

The mechanism carries the most influence on final price due to how fast it changes. Let's compare it to other factors.

Global regen is directly proportional to amount of VESTs in the system and over the history of STEEM/HIVE there is really just one episode when it swung significantly (upward when exchanges used their customers' tokens to help certain individual take over STEEM governance and downward with HF23 - HIVE hardfork - and the subsequent power down from users that wanted to stay on STEEM and treated HIVE as free money to be cashed out quickly). By the way, while it steadily increases, we are still 35% below average levels from STEEM times. RC cost of operation is directly proportional to the global regen when other factors are excluded - the more global regen, the more all operations cost.

Global regen change over time.

Fill level of a resource pool influences RC cost inversely, that is, when there is twice as much resource available, it costs half as much. There are some variations in pool parameters, but that is roughly the case. Let's look at an example of three transactions of the same size (the same use of history bytes = 195B) from times of different fill levels of the history pool. Their costs per byte, normalized to a common global regen, are the following:

- 245082, fill level 93.23% - price modifier vs full = +7.26% (how much more it costs compared to baseline cost with full pool)

- 709996, fill level 31.67% - price modifier vs full = +215.76%

- 557007, fill level 40.58% - price modifier vs full = +146.43%

If we use that to calculate cost of the remainder of the history byte pool in each case we get:

- 6181T or 3090 per point of global regen

- 6082T or 3041 per point of global regen

- 6115T or 3057 per point of global regen

No matter how much of the pool is already consumed, the remainder costs roughly the same, which shows inverse proportionality. What it means in practice is that the change in price can be significant with small change in fill level, but only when pool is mostly dry. On top of that, it takes a lot of time to consume or refill the pool (with exception of new execution time pool due to its very short half-life).
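The inverse proportionality can be checked against the three samples quoted above. This sketch assumes the price is simply proportional to 1 / fill level; the helper names are made up for illustration.

```python
# (cost per byte normalized to common global regen, history pool fill level)
SAMPLES = [
    (245082, 0.9323),
    (709996, 0.3167),
    (557007, 0.4058),
]


def price_modifier(fill_level):
    """Extra cost relative to a full pool, assuming price ~ 1 / fill level."""
    return 1.0 / fill_level - 1.0


def full_pool_price(cost, fill_level):
    """Back out what the same byte would cost if the pool was full."""
    return cost * fill_level


if __name__ == "__main__":
    for cost, fill in SAMPLES:
        print(
            f"fill {fill:.2%}: modifier {price_modifier(fill):+.2%}, "
            f"implied full-pool cost per byte {full_pool_price(cost, fill):.0f}"
        )
```

The modifiers reproduce the quoted percentages, and the implied full-pool price differs by less than 2% between the three samples, which is the same near-constancy visible in the cost of the remainder of the pool.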

Fill levels of (new in case of state and exec) resource pools over time.

Finally, the popularity share. Let's look at an example of how fast it can influence the price: pairs of similar transactions in vastly different popularity settings:

- custom_json_operation with total cost of 162,689,977 RC (81.78% history, 0.01% state, 18.21% exec)

- create_claimed_account_operation with total cost of 6,277,572,459 RC (5.61% history, 93.65% state, 0.74% exec)

Above transactions are not from the same block but pretty close. Pool fill levels were 44.61% history, 83.93% state, 57.85% exec, global regen at 1863G.

Now let's move just a bit shy of 12 days. We have another pair of almost identical transactions (the second one is actually a bit smaller than its equivalent from previous pair):

- custom_json_operation with total cost of 80,182,869 RC (83.25% history, 0.06% state, 16.69% exec)

- create_claimed_account_operation with total cost of 20,435,376,407 RC (0.77% history, 99.13% state, 0.1% exec)

Pool fill levels are now 51.77% history, 75.55% state, 83.93% exec, global regen at 1871G.

Global regen is almost the same in both cases (0.4% difference). The change in fill level of the history pool moved its resource price modifier from +124.16% down to +93.16% (16% difference), for the state pool from +19.15% up to +32.36% (11% difference), and for the exec pool from +72.86% down to +19.15% (45% difference). The changes in fill level affect the price somewhat, but nowhere near enough to explain the result. After all, the cost of custom_json_operation dropped to less than half, while at the same time the cost of create_claimed_account_operation more than tripled.

The difference comes from changes in resource popularity share. For the first pair it was 58.98% / 15.01% / 24.32% (with the rest on market bytes; new account tokens always use a 100% share modifier), while the second pair used 34.12% / 46.42% / 15.79%. It is the sharp increase in state byte popularity that tripled the cost of the operation with heavy use of state bytes. As you can see, the share for other resources dropped as a result.
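As a rough sanity check, the numbers quoted for both pairs can be combined under the assumption that the cost of a single resource scales as global regen * popularity share / fill level. That formula is a simplification made for this sketch, not the exact cost curve.

```python
def predicted_ratio(regen, share, fill):
    """Expected cost change for one resource; each argument is (before, after).

    Assumes cost ~ regen * share / fill (simplified model).
    """
    return (regen[1] / regen[0]) * (share[1] / share[0]) * (fill[0] / fill[1])


REGEN = (1863, 1871)  # global regen in G for the first and second pair

# History byte part of the two custom_json_operation transactions.
history_pred = predicted_ratio(REGEN, (58.98, 34.12), (44.61, 51.77))
history_obs = (80_182_869 * 0.8325) / (162_689_977 * 0.8178)

# State byte part of the two create_claimed_account_operation transactions.
state_pred = predicted_ratio(REGEN, (15.01, 46.42), (83.93, 75.55))
state_obs = (20_435_376_407 * 0.9913) / (6_277_572_459 * 0.9365)

if __name__ == "__main__":
    print(f"history bytes: predicted x{history_pred:.3f}, observed x{history_obs:.3f}")
    print(f"state bytes:   predicted x{state_pred:.3f}, observed x{state_obs:.3f}")
```

In both cases the popularity share accounts for most of the change, with fill level and global regen contributing comparatively small corrections.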

Change in popularity share over time.

Price change due to popularity share depends on how much particular operation uses resources that gain/lose popularity. F.e. comment_operation is generally heavy on state byte usage, unless it is an exceptionally long article. vote_operation is mostly history bytes, with solid 20% in exec time. custom_json_operation has similar characteristic, except while votes are all the same size, jsons can be small or big and the big ones are even more heavy on the history bytes. Transfers and similar market operations take half of their cost from market bytes.

Change in share of costs over time.

Summary.

- Social operations such as votes and comments (but also follow custom_jsons) are on average slightly less expensive. Only most recently votes became around 40% more expensive (but at the same time the much more costly comments dropped by 20%).

- Market related operations, with the exception of some rarely used ones and withdraw_vesting_operation (execution cost now covers all steps of withdrawal), are even 50% cheaper. But they were cheap to begin with.

- Account maintenance operations like account_update_operation are on average 80% more expensive, but that heavily depends on what you do - adding more authorities is expensive, changing existing authorities is not.

- Governance such as voting for witnesses or updating proposals is roughly the same as before. Only creation of long lasting proposals is more expensive.

- All forms of account creation became a lot more expensive, but most recently it is just two times more.

- New account tokens - no change (by design).

- Custom jsons (with the exception of follow, which was charged extra, but not anymore) became on average 40% more expensive, but most recently more like 70%. Due to the popularity share of history bytes already being at over 60% (and the cost share of that resource at over 90%), there is not much room for further growth in price. The only event in which it could still grow a lot more would be if max block size was increased and the history pool potentially ran dry.

Future.

One significant change is on hold, waiting for witnesses to actually allow RC to start effectively limiting average users. Currently, with max block size at only 64kB, a transaction is a lot more likely to miss a block due to congestion than due to the user not having enough RC (although it is possible that survivorship bias is at play here: we really have no tools to observe how often users struggle with lack of RC). Once the max size is increased, we might see more resources being consumed, RC pools drying up and RC costs skyrocketing. If that happens, the change would be to tie the budget of the history and execution pools to the max block size. But real data is needed first to analyze such a scenario.

And here I go back to RC delegations. If above indeed happens (RC costs skyrocketing), normal users, especially new ones, will be priced out. For bot operators it won't be a problem to acquire more RC delegation. But for people that just start their journey with Hive it would suddenly add another big step on the learning curve that might be too hard to deal with and discourage them.

The alternative is even worse - whales giving out free RC delegations proactively. It would mean a great amount of new RAM consumption.

TL;DR

Most users are not affected simply because they were not running dry on their RC to begin with. The most affected are bots of any level of wealth and free accounts that only use custom_jsons (if you engaged in social activities your account would quickly grow out of "free" qualifier).

If your only activity is custom_json_operation, especially if it aligns with various popular events on Splinterlands, then your RC costs will rise by 50% on average, but double most recently. If your activity revolves around creating new accounts, then your RC cost will at least double, but at times it might even rise 10 times. If your activity is mixed then you won't be affected. If you mainly do social related operations then your RC costs will drop. If your activity is trading (but not with use of custom_jsons) then your RC costs will drop significantly. This is all only due to massive increase in use of custom_jsons. If situation changes, if users start to blog more, or trade more, if average custom operation gets smaller due to changes in apps, then the RC costs will adapt pretty fast.

If you have any questions regarding RC, feel free to ask in comments. I tried not to repeat too much of what was already described in articles posted by original developers of the system around HF20 but it is obvious that not everyone read them :o)