How Artificial Intelligence Works

Part 2: Neural Networks

Introduction

So in yesterday's post, we talked about genetic algorithms, one of the simplest ways that an AI can learn. When we left off, I said that there would be several questions about AI, one of them being 'How does an AI think?'. To understand what neural networks are and how they work, we first have to learn a little about what this model is based on: the brain.

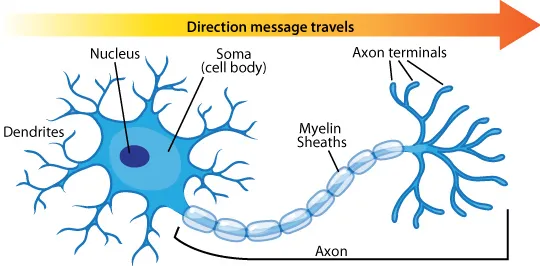

Neurons have two main parts that we care about. The first is the dendrites, which take in information, and the second is the axon, which releases information to other neurons. The connections between neurons also have different strengths: the stronger the connection, the stronger the signal that gets through.

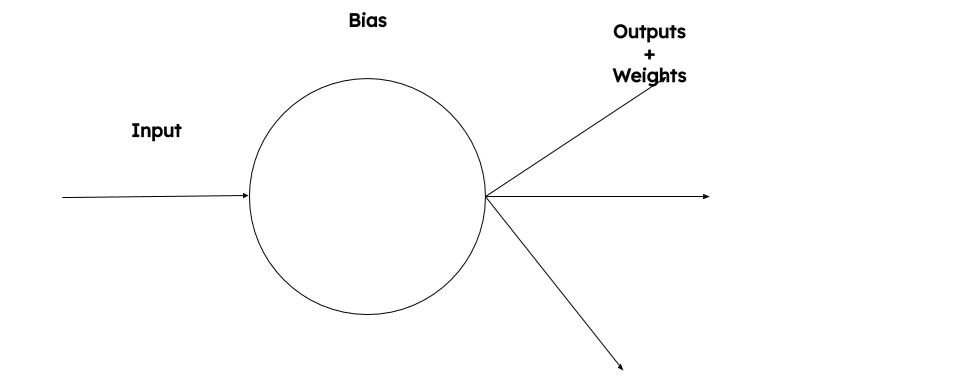

Now let's think about this. We're trying to create a type of 'brain' that can learn from experience. First, we'd have to design the 'neurons'. That's what Frank Rosenblatt did in 1958, calling his design the perceptron. The perceptron is pretty simple.

It takes in an input, typically a number between 0 and 1. It then adds a 'bias' to that number (the bias basically makes it easier or more difficult for the 'signal' to get through). It then outputs the result to the other neurons around it. If you can't tell, this is similar to, and based on, how neurons work.

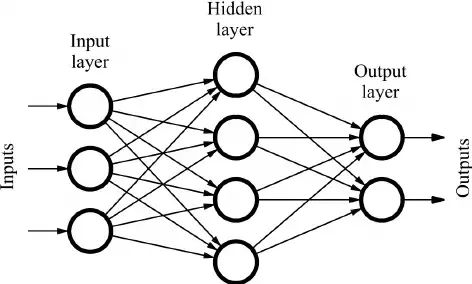

One neuron/perceptron, of course, cannot learn by itself; it needs to be part of something bigger, with multiple neurons that feed into each other. There are many, many models for doing this, but the one we're going to focus on is called a feedforward neural network (you may also hear it called a multilayer perceptron). The concept, while it seems complicated at first, is just math when you break it down.

When you combine many perceptrons together, you can get a network that can learn how to perform complex tasks. For example, let's say you're working on an AI that can tell the difference between dogs and cats. The only data you want to give it is the weight, the size, and the height of the animal. Those three numbers would first go into the input layer, the layer of the AI that gets, well, the input. It's like the eyes and ears of the network. This layer does not get a bias. As each number is sent on to the hidden neurons, however, it's multiplied by a weight (not the animal's weight, but a number attached to the connection). This is like the signal strength of the connection between the input and the hidden neuron. For example, if you were sending 0.8 from the input layer to the hidden layer, and the weight was 0.95, you'd simply multiply the two numbers together to get 0.76. Basically, the larger the weight, the 'more important' the number is.
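To make the multiplication step concrete, here's a minimal Python sketch. The three input values and the three connection weights are made-up numbers for illustration (only the 0.8 and 0.95 come from the example above), and `weighted_signals` is just a name I picked.

```python
# Hypothetical measurements for one animal, already scaled to the 0..1 range.
inputs = [0.8, 0.3, 0.5]      # weight, size, height of the animal
# Hypothetical connection weights from each input to a single hidden neuron.
weights = [0.95, 0.4, 0.1]


def weighted_signals(inputs, weights):
    """Multiply each input by the weight of the connection it travels along."""
    return [value * weight for value, weight in zip(inputs, weights)]


signals = weighted_signals(inputs, weights)
print([round(s, 2) for s in signals])  # [0.76, 0.12, 0.05]
```

The first input ends up mattering the most here, simply because its connection has the largest weight.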

Next, you would send that data to the hidden layer, or the hidden neurons. These are the meat of the neural network, and they work like the perceptrons we discussed earlier. They take the sum of all of the inputs * the weights, and then add the bias to it. You may be asking yourself, 'Well, you said the numbers should be between 0 and 1.' This is absolutely true, and there are two parts to doing this. The first is called the 'activation function'. This basically squishes our sum down to between 0 and 1. Now there are many activation functions, each with their own pros and cons, but for now we're going to focus on the Sigmoid function.
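Here's a small Python sketch of what a single hidden neuron does before any squishing happens. The inputs, weights, and the bias of -0.5 are all made-up values, and `weighted_sum_plus_bias` is a hypothetical helper name, so treat this as one way to write it rather than the only way.

```python
def weighted_sum_plus_bias(inputs, weights, bias):
    """Sum every input * weight pair, then add the neuron's bias."""
    total = sum(value * weight for value, weight in zip(inputs, weights))
    return total + bias


# Hypothetical inputs, connection weights, and bias for one hidden neuron.
raw = weighted_sum_plus_bias([0.8, 0.3, 0.5], [0.95, 0.4, 0.1], bias=-0.5)
print(round(raw, 2))  # 0.43
```

With other weights this raw number could easily land outside 0 to 1, which is why the activation function is needed next.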

The Sigmoid function (called that because of its S shape) takes a number and squishes it to be between 0 and 1. If you look carefully at its graph, you may notice that when the input is above 6 or below -6, the output is basically equal to 1 or 0 respectively. This is a problem, since we may want the neuron to only light up when, say, the sum of the inputs * weights is >5. This is where the bias comes in. The bias is simply a positive or negative number that each individual hidden neuron has, which is added to the sum before it gets put into the Sigmoid function.
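Below is a short Python sketch of the Sigmoid function and of how a bias shifts the point where a neuron 'lights up'. The bias of -5 is an assumption chosen to match the '>5' example, and `neuron_output` is a hypothetical helper name.

```python
import math


def sigmoid(x):
    """Squish any number into the range 0..1 (written to avoid overflow)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def neuron_output(weighted_sum, bias):
    """Add the bias to the weighted sum, then apply the Sigmoid function."""
    return sigmoid(weighted_sum + bias)


print(sigmoid(0))            # 0.5, the halfway point when there is no bias
print(neuron_output(5, -5))  # 0.5, the bias moved the halfway point to a sum of 5
print(neuron_output(8, -5))  # close to 1: the neuron is lit up
print(neuron_output(2, -5))  # close to 0: the neuron stays dark
```

So a negative bias makes it harder for the signal to get through, and a positive bias makes it easier.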

Finally, all of this data is added together at each individual output neuron and Sigmoid squished, and then, finally, we have a fully functioning neural network, or brain.
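Putting the pieces together, here's a minimal Python sketch of one pass through the whole network: 3 inputs, 2 hidden neurons, and 1 output neuron. Every weight and bias below is a made-up number, the function names are my own, and I'm assuming the output neuron also has weights and a bias like the hidden ones do. This is not the JavaScript implementation I mention below, just an illustration.

```python
import math


def sigmoid(x):
    """Squish any number into the range 0..1 (written to avoid overflow)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def layer(inputs, weights, biases):
    """Run one layer: each neuron sums inputs * weights, adds its bias, squishes."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, neuron_weights)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


def feedforward(inputs, hidden_w, hidden_b, output_w, output_b):
    """Send the inputs through the hidden layer, then the output layer."""
    hidden = layer(inputs, hidden_w, hidden_b)
    return layer(hidden, output_w, output_b)


# Hypothetical network: one row of weights per neuron.
hidden_w = [[0.95, 0.4, 0.1], [-0.3, 0.8, 0.6]]
hidden_b = [-0.5, 0.2]
output_w = [[1.2, -0.7]]
output_b = [0.1]

animal = [0.8, 0.3, 0.5]  # weight, size, height scaled to 0..1
result = feedforward(animal, hidden_w, hidden_b, output_w, output_b)
print(result)  # a list with one number between 0 and 1
```

You could read that single output as, say, 'closer to 1 means dog, closer to 0 means cat', but with random weights like these the answer is meaningless until the network has learned.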

Great, you may be thinking. I have the 'brain', and I know one method of making it learn, so how do I combine the two? That's what we'll be learning in my next post, where I show how you could set this up. I'm currently working on a JavaScript implementation of this, and if I can't finish it within a few days, I'll probably create a pseudocode example instead. And if you're not a programmer, don't let the word pseudocode scare you! It's just code that's more simplified so that it's easier to understand and implement in any programming language. And if you do know how to program, knowing the basics of object-oriented programming will be useful if you want to dive into my code, but it's not necessary.

If you have any questions, comments or criticisms, please let me know! I'm learning along with all of you. Thanks for reading :)

(By the way, if you want to check my current progress with the code, or even help a little, my GitHub is billyb2)

Sources: