Experts warn that action must be taken to keep artificial intelligence from spiraling out of control.

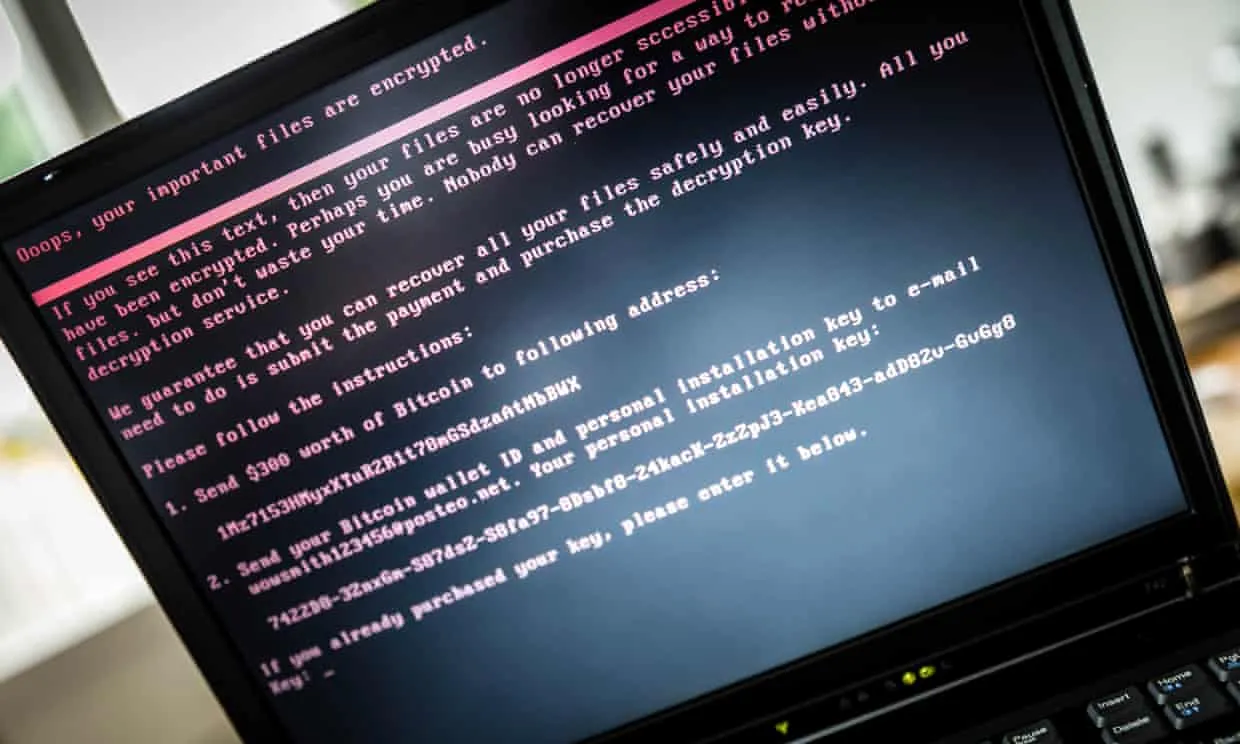

AI may come to play an increasingly large role in cybercrime and other nefarious activities.

In the age of artificial intelligence, there will certainly be cases of the technology being used for good as well as for bad.

The question we have to ask ourselves is: what should this new and revolutionary technology be used for?

AI could enable new forms of cybercrime, political disruption and even physical attacks within five years, a group of 26 experts from around the world has warned.

In a new report, the experts, drawn from academia, industry and the charitable sector, describe AI as a “dual-use technology” with potential military and civilian uses, akin to nuclear power, explosives and hacking tools.

“As AI capabilities become more powerful and widespread, we expect the growing use of AI systems to lead to the expansion of existing threats, the introduction of new threats and a change to the typical character of threats,” the report says.

They argue that researchers need to consider potential misuse of AI far earlier in the course of their studies than they do at present, and work to create appropriate regulatory frameworks to prevent malicious uses of AI.

If the advice is not followed, the report warns, AI is likely to revolutionise the power of bad actors to threaten everyday life. Beyond that, AI could lower the barrier to entry for carrying out damaging hacking attacks.

Can you imagine a world where the discovery of critical software bugs is automated? Or a world in which malicious AI rapidly selects potential victims for financial crime?

AI might even be used to exploit Facebook-style algorithmic profiling to create “social engineering” attacks designed to maximise the likelihood that a user will click on a malicious link or download an infected attachment. Talk about creepy, right?

Image Source: Rob Engelaar/EPA

AI's growing influence on the physical world also increases the likelihood of its misuse. One example that has been discussed before is the idea of weaponised “drone swarms”: drones fitted with small explosives and self-driving technology, then set loose to carry out untraceable assassinations. They have been dubbed “slaughterbots”, and the idea sounds like something straight out of a science fiction novel!

Want a good look at what I'm talking about? Check out the video below!

Beyond the potential for drone swarms to create a lethal and hostile environment for everyday people, it is also very plausible that AI will play a role in political disruption.

Nation states may decide to use automated surveillance platforms to suppress dissent – as is already the case in China, particularly for the Uighur people in the nation’s northwest.

Others may create “automated, hyper-personalised disinformation campaigns”, targeting every individual voter with a distinct set of lies designed to influence their behaviour.

What kind of a world are we going to leave for our children? This is the question we need to ask when we consider how much activism and research is needed to control the direction of AI development. I don't know about you, but I see China's existing use of AI to suppress dissent as a preview of what is to come unless we, as a people, get our hands dirty managing this AI rollout.

Beyond the cases mentioned above, AI could also increase bad actors' ability to carry out “denial-of-information attacks”. Sophisticated AI systems could generate so many convincing fake news stories that legitimate information becomes almost impossible to distinguish from the disinformation campaigns bad actors will be able to run.

Seán Ó hÉigeartaigh is a researcher at the University of Cambridge’s Centre for the Study of Existential Risk. When asked about the potential for AI to wreak havoc on the masses, he said: “We live in a world that could become fraught with day-to-day hazards from the misuse of AI and we need to take ownership of the problems – because the risks are real. There are choices that we need to make now, and our report is a call-to-action for governments, institutions and individuals across the globe.”

I tend to agree with Seán that the risk of AI being misused is very real. This isn't paranoia; it's a look at the greed and lust for power that drive many human beings to do unspeakable things every day.

It is important to find a balanced way to handle the rollout of AI into the real world, and I don't think taking a stance completely against AI is the right approach either. There is certainly a lot of good that can come from developing this technology and using it to fix our world's problems.

Indeed, not everyone is convinced that AI poses such a risk. For example, Dmitri Alperovitch, the co-founder of information security firm CrowdStrike, said: “I am not of the view that the sky is going to come down and the earth open up.”

“There are going to be improvements on both sides; this is an ongoing arms race. AI is going to be extremely beneficial, and already is, to the field of cybersecurity. It’s also going to be beneficial to criminals. It remains to be seen which side is going to benefit from it more.”

“My prediction is it’s going to be more beneficial to the defensive side, because where AI shines is in massive data collection, which applies more to the defence than offence.”

I think Dmitri makes a lot of valid points here. The sky probably won't come down and the earth won't open up. As AI gets more sophisticated, things will change and society will be restructured, but for the most part life will go on. He sees AI as a fantastic tool for creating a safer and more secure world. His point that AI will be more useful to defence than to offence is quite interesting. I'm not sure I completely agree with it, but it does seem to have merit.

In conclusion, from the research I've done on this topic, it seems that AI will be the best defence against AI. The world has entered a new age in which cyberwarfare has replaced nuclear arms, and the race to create superior AI platforms is on in both the private and public sectors.

There is a lot of potential for AI to transform our world in positive ways, assuming the people in charge of its design and implementation have good intentions. I certainly won't be losing sleep over this issue, but I will be keeping a close eye on it in the news over the next few months, as I believe we are going to see some major breakthroughs in 2018.

What does the ADSactly community think about AI and its potential for increased cyberwarfare and cybercrime?

Do you see AI as more of a tool for good or evil? Do you have any examples of AI already being used in our world to improve the quality of life for people?

Here's a chance for the Steemit community to share their thoughts and opinions on this topic!

Thanks for reading.

Authored by: @techblogger

In-text citation sources:

"Growth of AI could boost cybercrime and security threats, report warns" - The Guardian

Image Sources: The Guardian, Pexels